Introduction

This is a collection of articles about how to do stuff, mostly technical. Learn more.

Note

If an operating system is not specified, it should be assumed that instructions in a given article are for Linux, Debian-based.

Recently Added

| Title | Added |

|---|---|

| Gradle Quick Start | January 30, 2026 |

| Get Path For Executable (.NET/C#) | November 30, 2025 |

| Manual Installation of .NET SDK in Linux | November 14, 2025 |

| Generating Thumbnails for Video and Audio Files In Ubuntu | November 6, 2025 |

| Neovim Qt Startup Error | October 11, 2025 |

| Maven Example | September 28, 2025 |

| Install Snapd on Debian | September 6, 2025 |

| Quarto Special Markup | September 3, 2025 |

Pinned

Learning Paths

Articles for specific topics.

Embedded and IoT

| Title | Description |

|---|---|

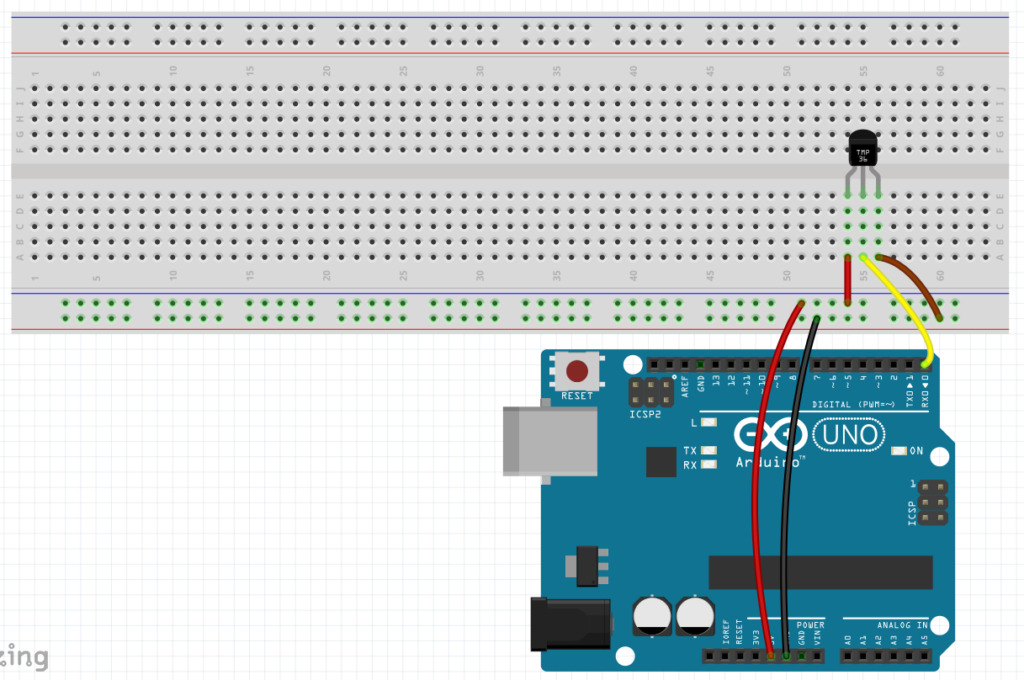

| Arduino / Raspberry Pi Remote Sensor | |

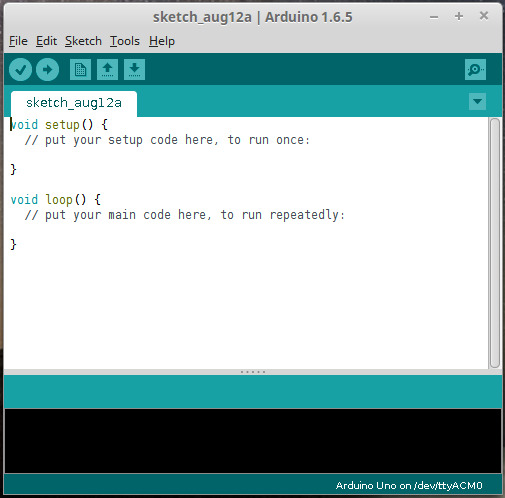

| Basic Arduino Uno Dev Setup in Linux (Debian-based) | |

| Command-Line Arduino | Using the arduino-cli command line tool to work with Arduino boards. |

| .NET IoT | Accessing IoT devices from .NET/C#. |

| MicroPython on ESP32 | Configuring and using the MicroPython environment on ESP32 boards. |

| Online IoT/Embedded Simulators | |

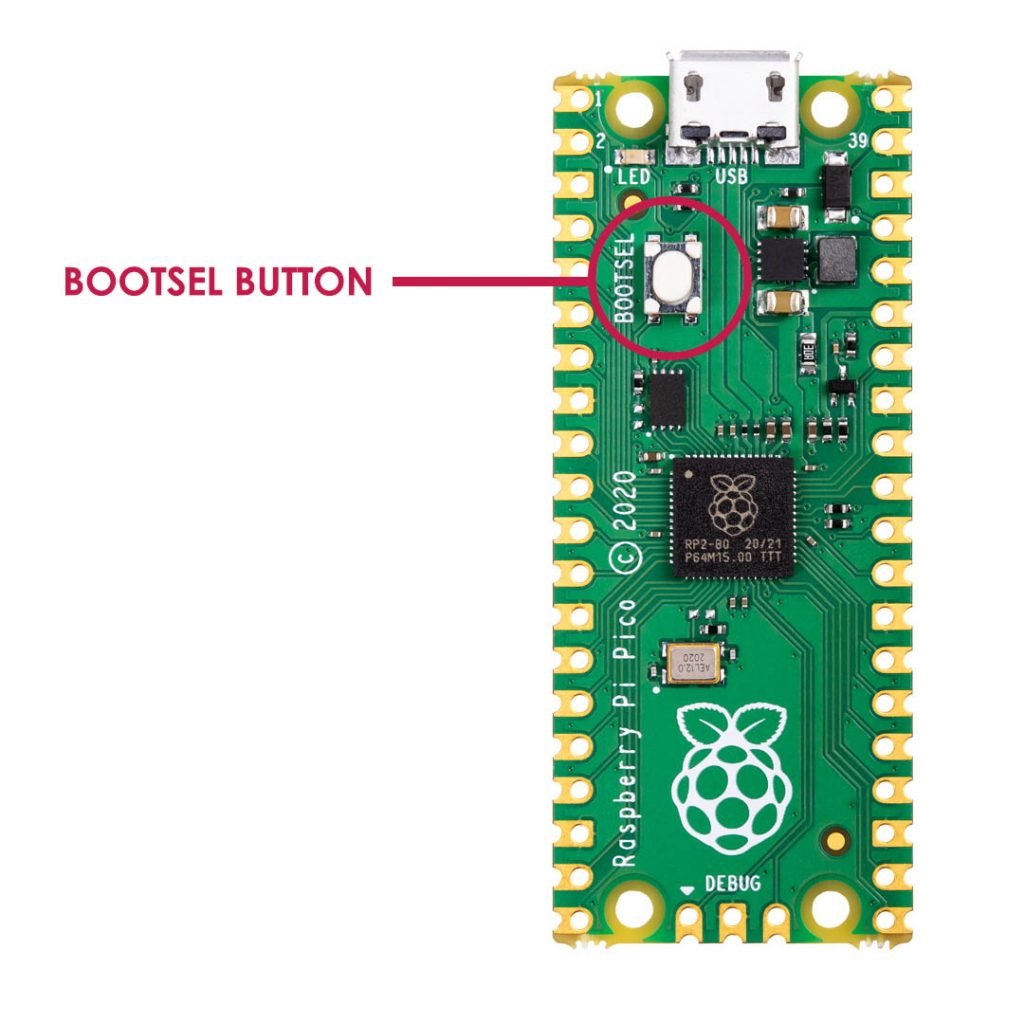

| Programming Arduino (AVR) and Raspberry Pi Pico (ARM) in C | |

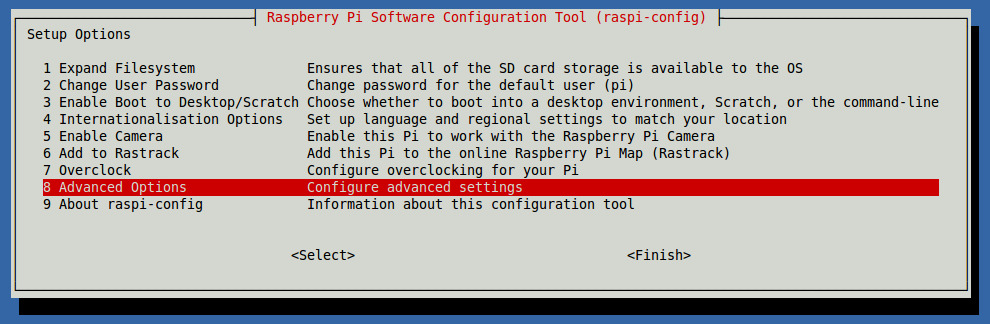

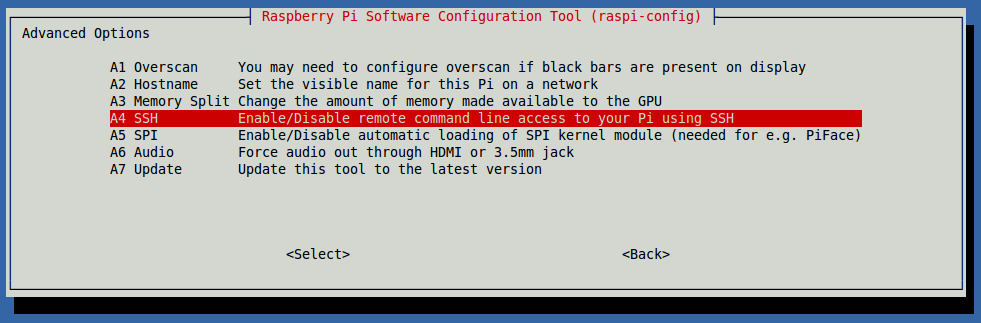

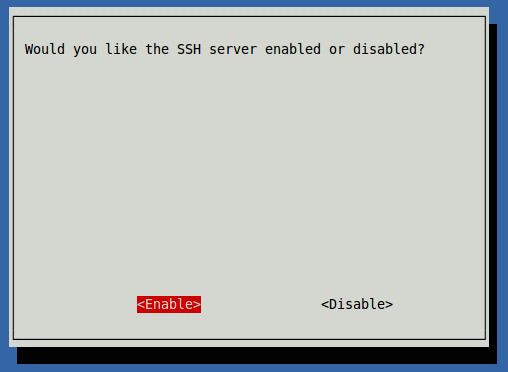

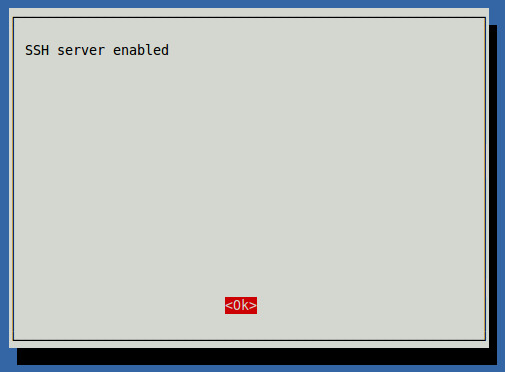

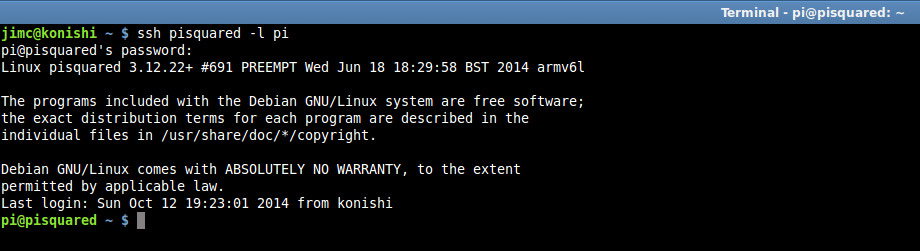

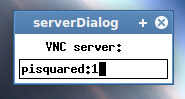

| Remote Access for Raspberry Pi | Configuring and using SSH with Raspberry Pi. |

| Sense HAT | Working with the Sense HAT multi-sensor peripheral board. |

| Simple Raspberry Pi Control With .NET IoT and Python |

Low-Level

| Title | Description |

|---|---|

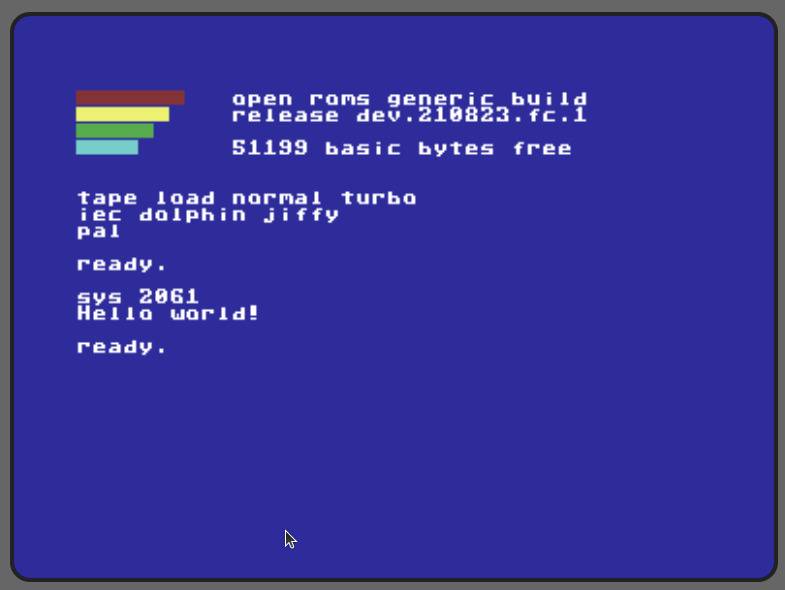

| 6502 Programming in C | Programming some really old hardware in C. |

| Complex Object Collections in C++ | |

| Enumeration Scoping in C++ | |

| GDB and GPROF | Using the Gnu Debugger and Profiler. |

| Programming Arduino (AVR) and Raspberry Pi Pico (ARM) in C |

Mainframe

| Title | Description |

|---|---|

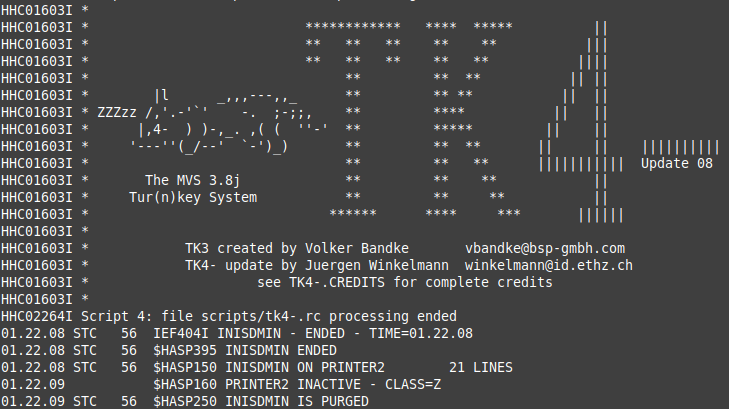

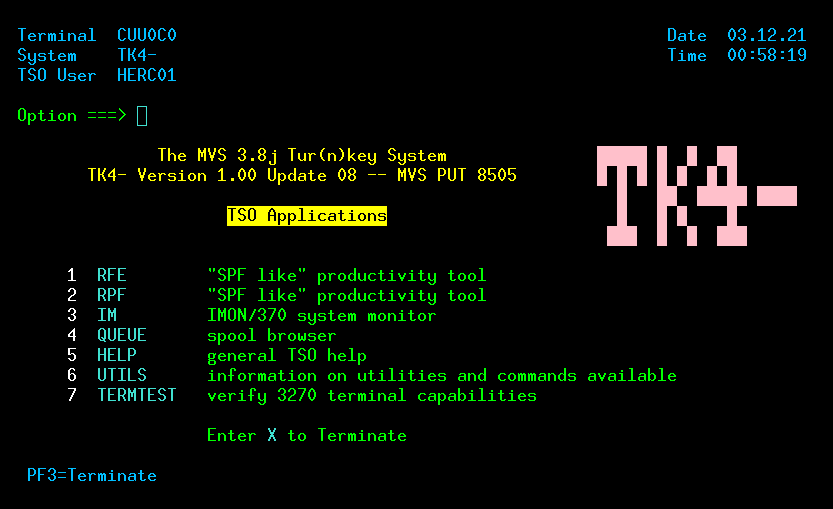

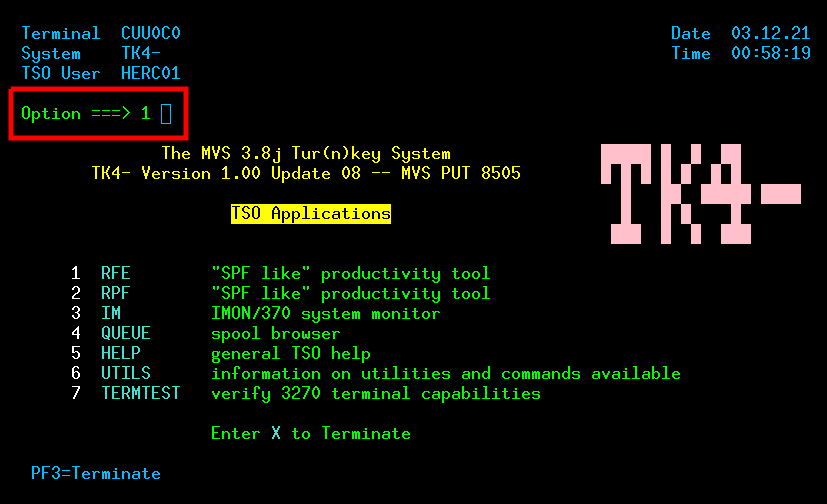

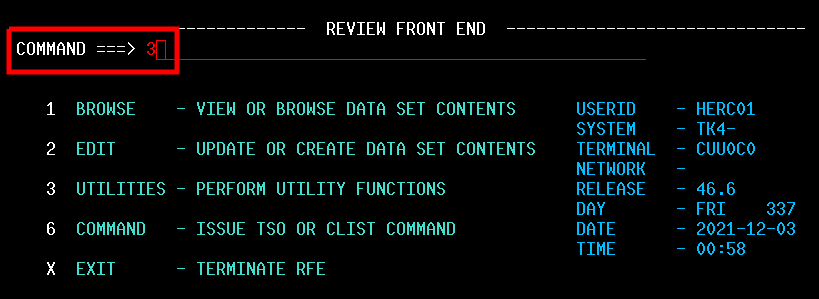

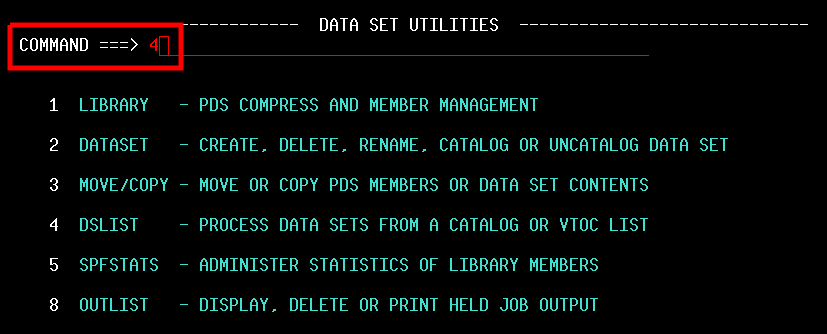

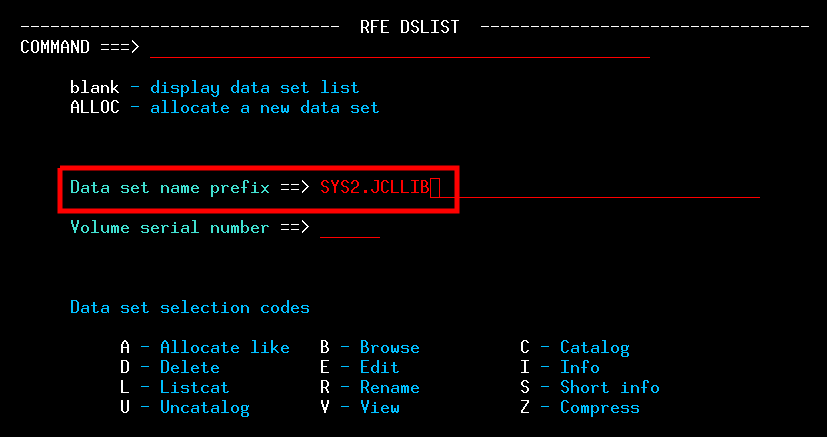

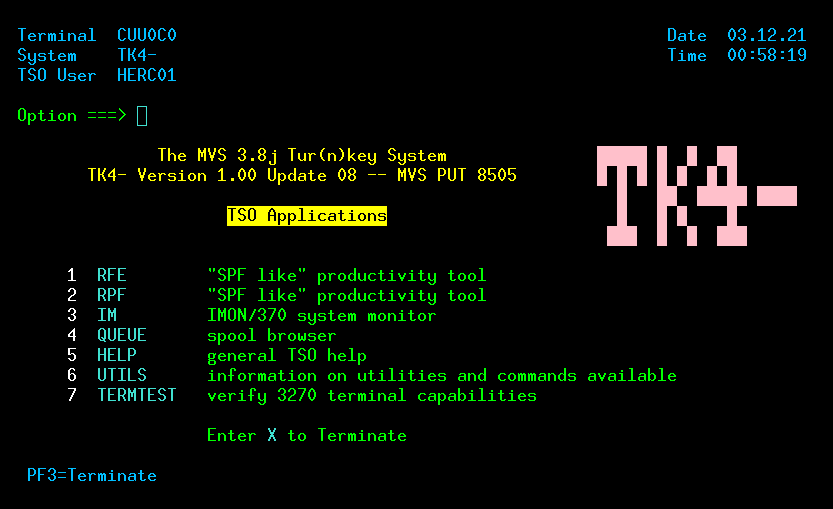

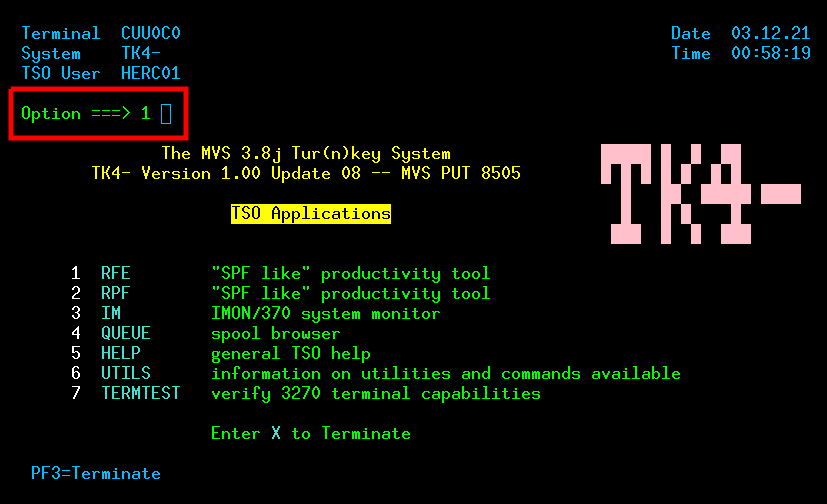

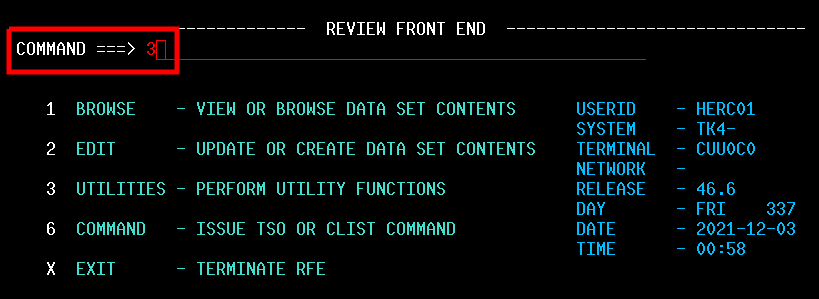

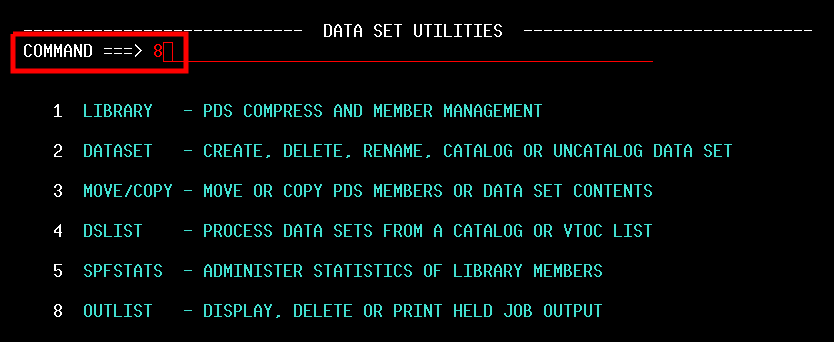

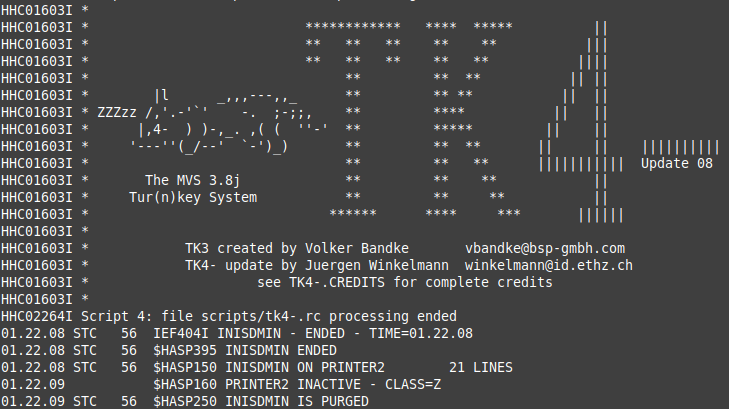

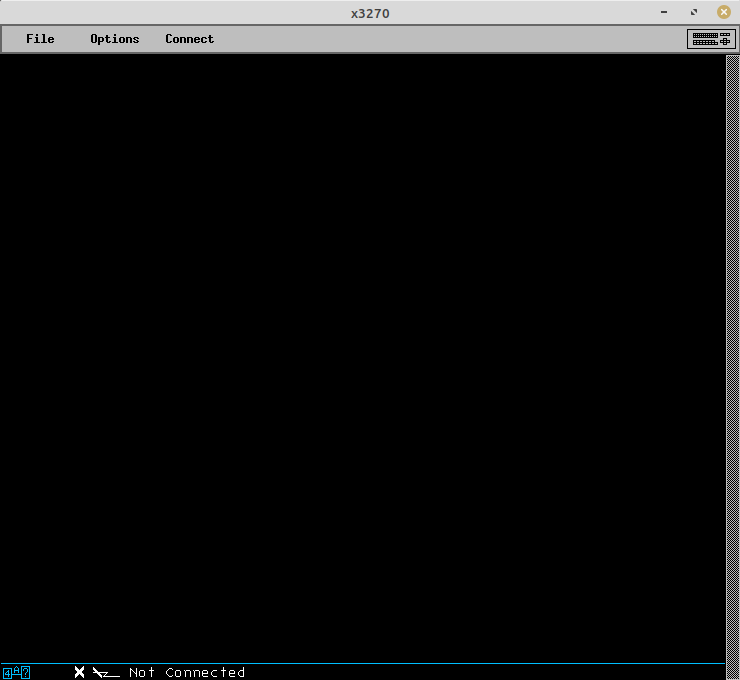

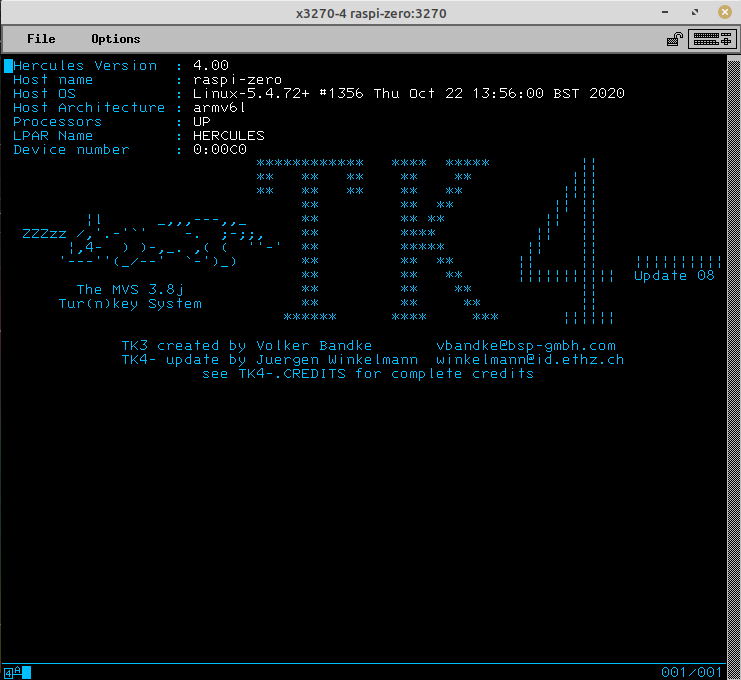

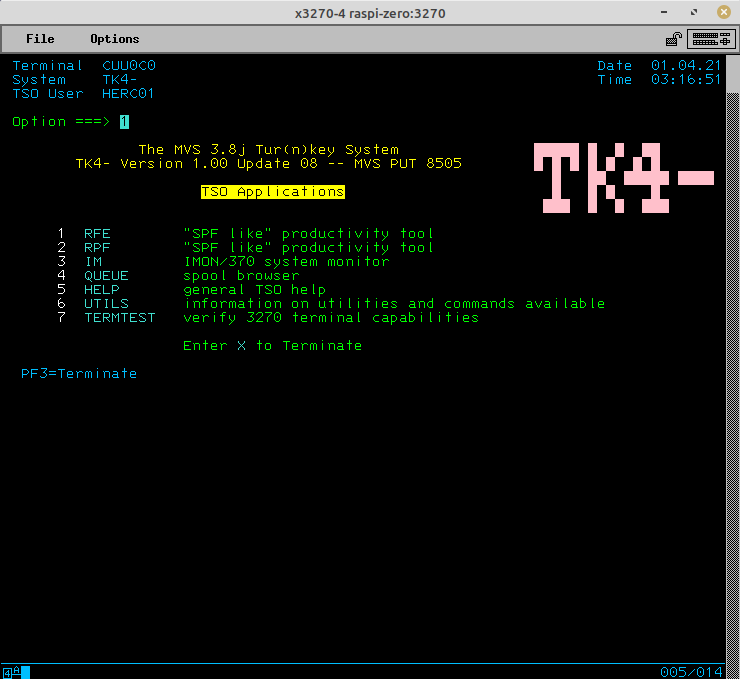

| Mainframe Emulation on Raspberry Pi Zero | Covers setup of the MVS 3.8j Turnkey system, 3270 terminal, logon, and logoff. (Uses Raspberry Pi Zero for the hardware, but the instructions can easily be adapted for other targets.) |

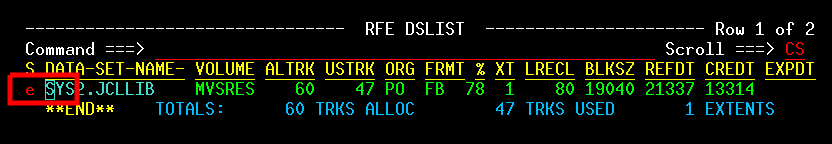

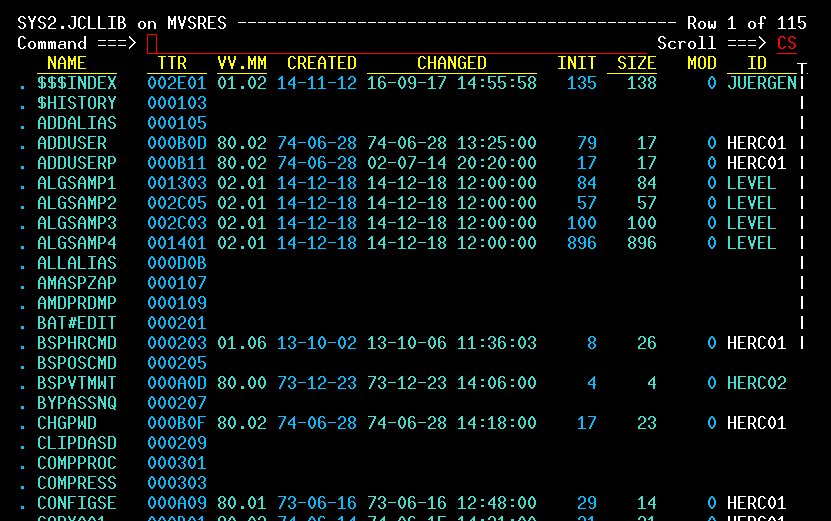

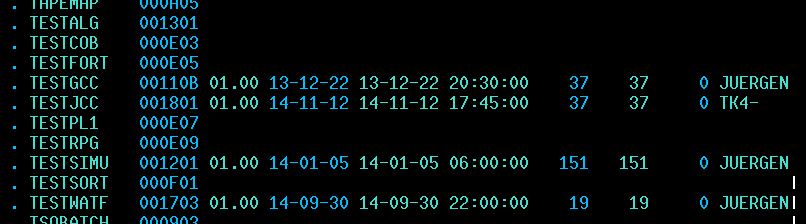

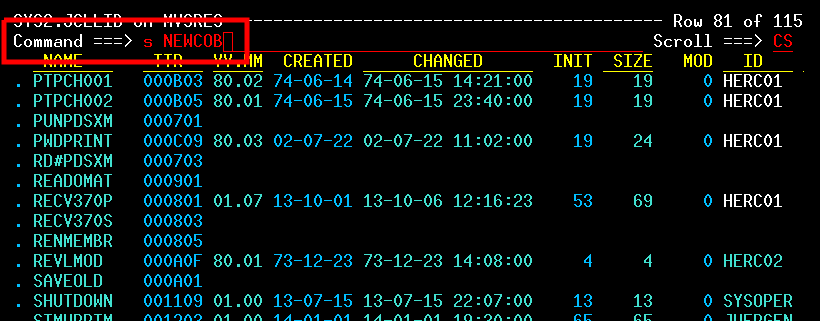

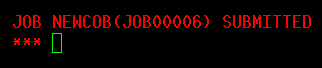

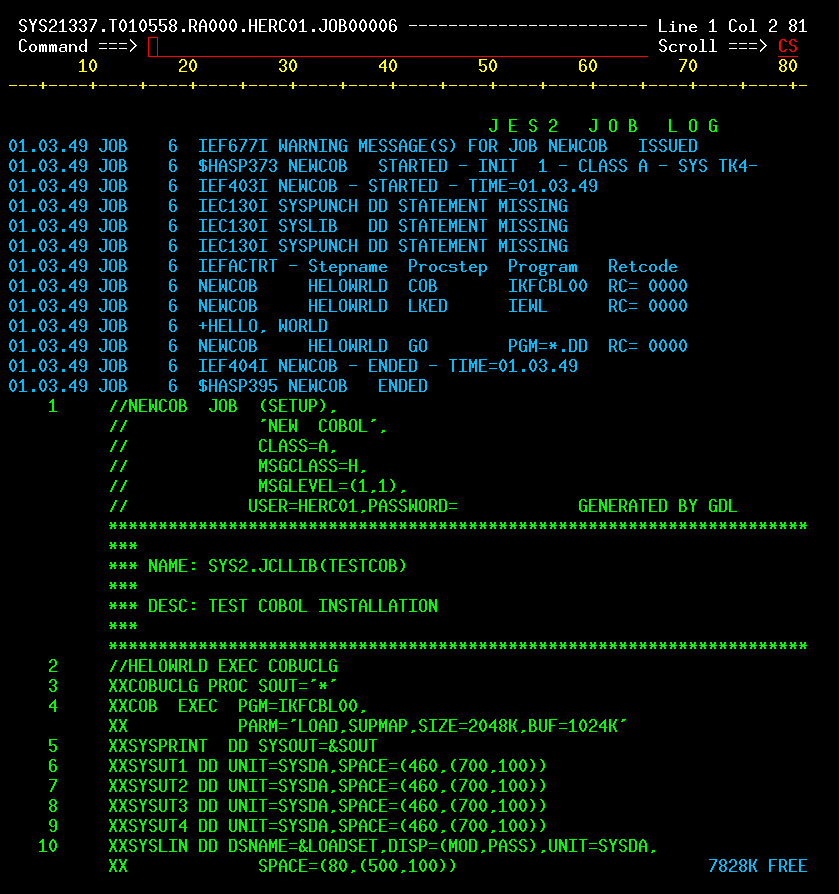

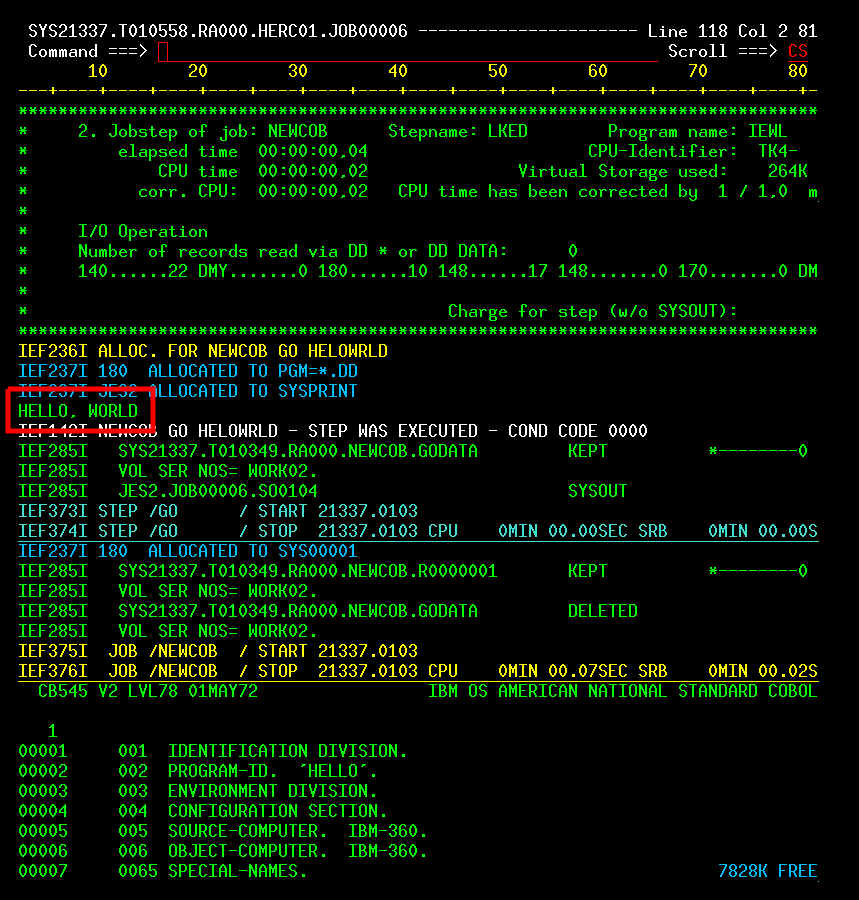

| JCL and Programming On the MVS Turnkey System | Using JCL to run jobs and programming in COBOL, FORTRAN, PL/1, and C on the MVS system. |

| MVS Turnkey in Docker | Set up and run the MVS Turnkey system in a Docker container. |

Terms

- Initial Program Load (IPL)

- Job Control Language (JCL)

- Multiple Virtual Storage (MVS)

- Resource Access Control Facility (RACF)

- Time Sharing Option (TSO)

About

My name is Jim Carr. I’m a software engineer, and I live near Dayton, Ohio.

I started this knowledge base to collect technical how-tos, walkthroughs, etc, that are of interest to me and which I hope will be helpful for others.

.NET

Add Settings File to .NET Console Application

App Settings File

Create appsettings.json file in project root. Example contents:

{

"Settings": {

"Title": "My Application",

"Timeout": 30

}

}

Packages / Project Output

Add the following packages to the project’s .csproj file:

<ItemGroup>

<PackageReference Include="Microsoft.Extensions.Configuration.Binder" Version="7.0.4" />

<PackageReference Include="Microsoft.Extensions.Configuration.EnvironmentVariables" Version="7.0.0" />

<PackageReference Include="Microsoft.Extensions.Configuration.Json" Version="7.0.0" />

</ItemGroup>

Add the following directive to copy the appsettings file with the binary:

<ItemGroup>

<Content Include="appsettings.json">

<CopyToOutputDirectory>Always</CopyToOutputDirectory>

</Content>

</ItemGroup>

Settings Class

Create a class to hold the settings:

public sealed class AppSettings

{

public required string Title { get; set; }

public required int Timeout { get; set; }

}

Initialize Configuration

Initialize the configuration, and retrieve the settings:

IConfiguration config = new ConfigurationBuilder()

.AddJsonFile("appsettings.json")

.AddEnvironmentVariables()

.Build();

AppSettings appSettings = config.GetRequiredSection("Settings").Get<AppSettings>();

Access Settings

Access the settings:

var title = appSettings.Title;

var timeout = appSettings.Timeout;

Alternate Access Method

Tip

If you use this method exclusively, you don’t need the settings class.

var title = config.GetValue<string>("Settings:Title");

var timeout = config.GetValue<int>("Settings:Timeout");

API Key in .NET WebAPI Project

Create a webapi project, e.g.:

dotnet new webapi -o MyMicroservice

Add a middleware class:

public class ApiKeyMiddleware

{

private readonly string _apiKeyName;

private readonly string _apiKeyValue;

private readonly RequestDelegate _next;

public ApiKeyMiddleware(RequestDelegate next, string apiKeyName, string apiKeyValue)

{

_next = next;

_apiKeyName = apiKeyName;

_apiKeyValue = apiKeyValue;

}

public async Task InvokeAsync(HttpContext context)

{

if (!context.Request.Headers.TryGetValue(_apiKeyName, out var extractedApiKey))

{

context.Response.StatusCode = 401;

await context.Response.WriteAsync("API Key was not provided.");

return;

}

if (!_apiKeyValue.Equals(extractedApiKey))

{

context.Response.StatusCode = 403;

await context.Response.WriteAsync("Unauthorized client.");

return;

}

await _next(context);

}

}

Handle the API key check in Program.cs with the following code. Place this before the app.MapControllers() call:

string? apiKeyName = "My-Api-Key-Name";

string? apiKeyValue = "85a80751-cc34-4a6e-9ad9-4d9c9bea403c"; // Do NOT store your actual key value inline!

// Retrieve it from a protected

// location, e.g., an Azure key vault.

// Validate the request:

app.UseMiddleware<ApiKeyMiddleware>(apiKeyName, apiKeyValue);

Calls to the API look like this:

GET https://your_webservice_url/some_endpoint

Accept: application/json

My-Api-Key-Name: 85a80751-cc34-4a6e-9ad9-4d9c9bea403c

Call Async Method from Non-Async Method in C#

When you call an asynchronous method with an await, the method you’re calling from must also be asynchronous. But, sometimes that’s problematic: Perhaps you’re working with existing code, or you’re implementing code in a context where it’s difficult to make it asynchronous.

You can “localize” the management of asynchronous calls using Task.Run(). In the following code, look at the two instances of Task.Run(), Wait(), and Result to see how to do it:

using (HttpContent content = new StringContent(text, Encoding.UTF8, "text/xml"))

using (HttpRequestMessage request = new HttpRequestMessage(HttpMethod.Post, url))

{

request.Headers.Add("SOAPAction", "");

request.Content = content;

var task1 = Task.Run(() => httpClient.SendAsync(request, HttpCompletionOption.ResponseHeadersRead));

task1.Wait();

var response = task1.Result;

var task2 = Task.Run(() => response.Content.ReadAsStreamAsync());

task2.Wait();

var stream = task2.Result;

using var reader = new StreamReader(stream);

return reader.ReadToEnd();

}

Connect To MySQL From .NET

https://dev.mysql.com/doc/connector-net/en/

https://dev.mysql.com/doc/connector-net/en/connector-net-installation-binary-nuget.html

https://www.nuget.org/packages/MySql.Data

dotnet add package MySql.Data --version 8.2.0

https://dev.mysql.com/doc/connector-net/en/connector-net-connections-string.html

https://dev.mysql.com/doc/connector-net/en/connector-net-programming-mysqlcommand.html

Create Code Behind File for Razor Page In Blazor Application

This example will use the Counter.razor page in the default Blazor WebAssembly App project.

Default Counter.razor page contains this:

Counter.razor

@page "/counter"

<PageTitle>Counter</PageTitle>

<h1>Counter</h1>

<p role="status">Current count: @currentCount</p>

<button class="btn btn-primary" @onclick="IncrementCount">Click me</button>

@code {

private int currentCount = 0;

private void IncrementCount()

{

currentCount++;

}

}

In the same directory, create a new class file named Counter.razor.cs with this content:

Counter.razor.cs

namespace BlazorCodeBehind.Pages

{

public partial class Counter

{

}

}

(Change BlazorCodeBehind.Pages to match the namespace for your project)

Copy and paste the contents of the @code block from Counter.razor into the class file so that it looks like this:

Counter.razor.cs

namespace BlazorCodeBehind.Pages

{

public partial class Counter

{

private int currentCount = 0;

private void IncrementCount()

{

currentCount++;

}

}

}

Remove the code block from Counter.razor so that it looks like this:

Counter.razor

@page "/counter"

<PageTitle>Counter</PageTitle>

<h1>Counter</h1>

<p role="status">Current count: @currentCount</p>

<button class="btn btn-primary" @onclick="IncrementCount">Click me</button>

C# Language Versioning

| Target | Version | C# language version default |

|---|---|---|

| .NET | 10.x | C# 14 |

| .NET | 9.x | C# 13 |

| .NET | 8.x | C# 12 |

| .NET | 7.x | C# 11 |

| .NET | 6.x | C# 10 |

| .NET | 5.x | C# 9.0 |

| .NET Core | 3.x | C# 8.0 |

| .NET Core | 2.x | C# 7.3 |

| .NET Standard | 2.1 | C# 8.0 |

| .NET Standard | 2.0 | C# 7.3 |

| .NET Standard | 1.x | C# 7.3 |

| .NET Framework | all | C# 7.3 |

source: https://learn.microsoft.com/en-us/dotnet/csharp/language-reference/configure-language-version

Dependency Injection in .NET/C#

Dependency injection - .NET | Microsoft Learn

Use dependency injection - .NET | Microsoft Learn

Dependency Injection By Hand · ninject/Ninject Wiki

Deploy Blazor WebAssembly to GitHub Pages

Create a Blazor webassembly project, e.g.:

dotnet new blazorwasm -o BlazorWasmTest

Publish:

dotnet publish -c Release -o output

The base tag in output/wwwroot/index.html needs to be updated. Change this tag:

<base href="/" />

To this:

<base href="https://root-of-github-page/" />

For example, if your GitHub username is johndoe, and your repo name is my-cool-repo, then your GitHub page URL tag will be this:

<base href="https://johndoe.github.io/my-cool-repo/" />

If you want to simplify this, e.g., in a Makefile, use these commands in a Makefile target:

dotnet publish -c Release -o output

cd output/wwwroot; sed -i 's|<base href="/" />|<base href="https://johndoe.github.io/my-cool-repo/" />|' index.html

In the repo, enable GitHub pages and add a Static HTML GitHub action as the deployment method. Update the static.yml file for the action as follows: In jobs, deploy, steps, with, path, change path: '.' to path: 'output/wwwroot'.

When you commit, the contents of output/wwwroot will automatically be deployed.

Determine path to web.config at runtime

In C#, you can determine which web.config is being used with this:

String pathToConfig = System.Web.HttpContext.Current.Server.MapPath("/Web.config");

Entity Framework

Model Generation

Instructions are for .NET 7

MySQL

dotnet new console –o applicationName

cd applicationName

dotnet add package MySql.EntityFrameworkCore --version 7.0.2

dotnet add package Microsoft.EntityFrameworkCore.Tools --version 7.0.14

If the Entity Framework tool is not already installed:

dotnet tool install --global dotnet-ef --version 7.*

Then, generate the model files in the models directory:

dotnet ef dbcontext scaffold "server=127.0.0.1;port=3306;uid=jimc;pwd=password;database=database_name" MySql.EntityFrameworkCore -o models -f

SQL Server

dotnet new console –o applicationName

cd applicationName

dotnet add package Microsoft.EntityFrameworkCore.SqlServer --version 7.0.14

dotnet add package Microsoft.EntityFrameworkCore.Tools --version 7.0.14

If the Entity Framework tool is not already installed:

dotnet tool install --global dotnet-ef --version 7.*

Then, generate the model files in the models directory:

dotnet ef dbcontext scaffold "Server=server_ip_address;User Id=sa;Password=password;Database=database_name;Encrypt = No" Microsoft.EntityFrameworkCore.SqlServer -o models -f

More Info

Data Annotations - Column Attribute in EF 6 & EF Core

FluentUI Configuration In Blazor Application

Project File

<ItemGroup>

<PackageReference Include="Microsoft.FluentUI.AspNetCore.Components" Version="4.10.4" />

<PackageReference Include="Microsoft.FluentUI.AspNetCore.Components.Icons" Version="4.10.4" />

</ItemGroup>

Program.cs

using Microsoft.FluentUI.AspNetCore.Components;

builder.Services.AddRazorComponents()

.AddInteractiveServerComponents();

builder.Services.AddFluentUIComponents(); // add this

var app = builder.Build();

_Imports.razor

@using Microsoft.FluentUI.AspNetCore.Components

Links

Get Path For Executable (.NET/C#)

To get the path that a .NET console executable resides in, regardless of where the executable was launched from:

var exePath = Path.GetDirectoryName(Environment.ProcessPath ?? "")

This is useful in a scenario where you want to access a file that resides in the same directory, e.g., a configuration file:

IConfiguration config = new ConfigurationBuilder()

.AddJsonFile(Path.Join(Path.GetDirectoryName(Environment.ProcessPath ?? ""), "my-app-config.json"))

.AddEnvironmentVariables()

.Build();

Install .NET From Microsoft Feed in Ubuntu

Remove Existing Installs

sudo apt remove 'dotnet*' 'aspnet*' 'netstandard*'

Create /etc/apt/preferences, if it doesn’t already exist:

touch /etc/apt/preferences

Open /etc/apt/preferences in an editor and add the following settings, which prevents packages that start with dotnet, aspnetcore, or netstandard from being sourced from the distribution’s repository:

Package: dotnet* aspnet* netstandard*

Pin: origin "<your-package-source>"

Pin-Priority: -10

Replace <your-package-source> with your distribution’s package source. You can determine what it is with this:

apt-cache policy '~ndotnet.*' | grep -v microsoft | grep '/ubuntu' | grep updates | cut -d"/" -f3 | sort -u

Reinstall .NET From the Microsoft Package Feed

Open a terminal and run the following commands:

# Get Ubuntu version

declare repo_version=$(if command -v lsb_release &> /dev/null; then lsb_release -r -s; else grep -oP '(?<=^VERSION_ID=).+' /etc/os-release | tr -d '"'; fi)

# Download Microsoft signing key and repository

wget https://packages.microsoft.com/config/ubuntu/$repo_version/packages-microsoft-prod.deb -O packages-microsoft-prod.deb

# Install Microsoft signing key and repository

sudo dpkg -i packages-microsoft-prod.deb

# Clean up

rm packages-microsoft-prod.deb

# Update packages

sudo apt update

Install the SDK you want, e.g., 8.0:

sudo apt install dotnet-sdk-8.0

Manual Installation of .NET SDK in Linux

If you’ve already installed one or more .NET SDK versions via the package manager, uninstall them before proceeding.

Download the SDK versions you want to install from here. For this example, I’ll be installing .NET 8 and .NET 10, downloaded as dotnet-sdk-8.0.416-linux-x64.tar.gz and dotnet-sdk-10.0.100-linux-x64.tar.gz, respectively.

Open a terminal.

If ~/.dotnet already exists (from a previous installation), remove it:

rm -rf ~/.dotnet

Create a new, empty .dotnet directory:

mkdir ~/.dotnet

Extract the SDK archive(s) into the .dotnet directory:

tar zxf dotnet-sdk-8.0.416-linux-x64.tar.gz -C ~/.dotnet/

tar zxf dotnet-sdk-10.0.100-linux-x64.tar.gz -C ~/.dotnet/

Add to .profile:

export DOTNET_ROOT=$HOME/.dotnet

export PATH=$PATH:$DOTNET_ROOT:$DOTNET_ROOT/tools

Restart to apply the new .profile settings.

Open a new terminal and verify that the dotnet CLI is working correctly:

dotnet --list-sdks

You should see something like this:

8.0.416 [/home/username/.dotnet/sdk]

10.0.100 [/home/username/.dotnet/sdk]

Taken from here: https://learn.microsoft.com/en-us/dotnet/core/install/linux-scripted-manual#manual-install

Microservice Notes

Microservices architecture design

Tutorial: Create a web API with ASP.NET Core

Creating POST method in Web API

No Frameworks Were Found error

Error when executing dotnet cli in Linux:

No frameworks were found

To fix this, first remove the existing dotnet installation:

sudo apt remove 'dotnet*'

sudo apt remove 'aspnetcore*'

sudo apt remove 'netstandard*'

Then, reinstall each SDK:

sudo apt install dotnet-sdk-6.0

sudo apt install dotnet-sdk-7.0

Package and Publish To NuGet

Create a NuGet package with the dotnet CLI

Full article: https://learn.microsoft.com/en-us/nuget/create-packages/creating-a-package-dotnet-cli

Set Properties

Set (at least) the following properties in the .csproj file:

<Project Sdk="Microsoft.NET.Sdk">

<PropertyGroup>

<TargetFramework>netstandard2.0</TargetFramework>

<PackageId>UniqueID</PackageId>

<Version>1.0.0</Version>

<Authors>Author Name</Authors>

<Company>Company Name</Company>

<Product>Product Name</Product>

</PropertyGroup>

</Project>

PackageId will be used as the identifier on nuget.org. For example, if you give PackageId the value MyFancyLib, then the published nuget will be at https://www.nuget.org/packages/MyFancyLib.

PackageId must be unique, and not used by anyone else previously. Moreover, each time you publish an update the PackageId / Version combination must be unique. (You cannot overwrite a previously published version.)

Run the pack command

To build the NuGet package or .nupkg file, run the dotnet pack command from the project folder, which also builds the project automatically.

dotnet pack

The output shows the path to the .nupkg file:

MSBuild version 17.3.0+92e077650 for .NET

Determining projects to restore...

Restored C:\projects\MyFancyLib\MyFancyLib.csproj (in 97 ms).

Successfully created package 'C:\MyFancyLib\bin\Debug\MyFancyLib.1.0.0.nupkg'.

Publish NuGet packages

Full article: https://learn.microsoft.com/en-us/nuget/nuget-org/publish-a-package

To publish on nuget.org, sign in to nuget.org with a Microsoft account, and use it to create a free nuget.org account.

Create an API key

- Sign into your nuget.org account or create an account if you don’t have one already.

- Select your user name at upper right, and then select API Keys.

- Select Create, and provide a name for your key.

- Under Select Scopes, select Push.

- Under Select Packages > Glob Pattern, enter *.

- Select Create.

- Select Copy to copy the new key.

Use the dotnet CLI to publish

From the folder that contains the .nupkg file, run the following command. Specify your .nupkg filename, and replace the key value with your API key.

dotnet nuget push MyFancyLib.1.0.0.nupkg --api-key qz2jga8pl3dvn2akksyquwcs9ygggg4exypy3bhxy6w6x6 --source https://api.nuget.org/v3/index.json

The output shows the results of the publishing process:

Pushing MyFancyLib.1.0.0.nupkg to 'https://www.nuget.org/api/v2/package'...

PUT https://www.nuget.org/api/v2/package/

warn : All published packages should have license information specified. Learn more: https://aka.ms/nuget/authoring-best-practices#licensing.

Created https://www.nuget.org/api/v2/package/ 1221ms

Your package was pushed.

Remove Duplicates From List Collection

A couple of examples of removing duplicate entries in list collections in C#.

Implemented As a Method

using System;

using System.Collections.Generic;

using System.Collections.ObjectModel;

using System.Linq;

namespace Console1

{

class Program

{

static IEnumerable<T> RemoveDuplicates<T>(List<T> inputList)

{

inputList.Sort();

return inputList.Distinct();

}

static void Main(string[] args)

{

var myList = new List<string> {

"John",

"Andrew",

"James",

"Jack",

"Andrew",

"Bob",

"Jack"

};

var newList = RemoveDuplicates(myList);

foreach (var item in newList)

{

Console.WriteLine(item);

}

}

}

}

Implemented As An Extension

using System;

using System.Collections.Generic;

using System.Collections.ObjectModel;

using System.Linq;

namespace Console1

{

public static class ListExtension

{

public static IEnumerable<T> RemoveDuplicates<T>(this List<T> inputList)

{

inputList.Sort();

return inputList.Distinct();

}

}

class Program

{

static void Main(string[] args)

{

var myList = new List<string> {

"John",

"Andrew",

"James",

"Jack",

"Andrew",

"Bob",

"Jack"

};

var newList = myList.RemoveDuplicates();

foreach (var item in newList)

{

Console.WriteLine(item);

}

}

}

}

Side-by-Side .NET Core Installations

Setup

This describes a setup where .NET Core 3.1 was installed first, then .NET Core 2.2 was installed later.

Since .NET Core 3.1 is the newest version, issuing a command like this:

dotnet new console -o MyConsoleApp

…creates a console application that targets v3.1. If you look at the .csproj file, you’ll see this:

<TargetFramework>netcoreapp3.1</TargetFramework>

Project Level

There are a couple of ways to target v2.2. Different templates support different methods.

For a console app, simply edit the .csproj to reflect the version you want:

<TargetFramework>netcoreapp2.2</TargetFramework>

…and then restore:

dotnet restore

Some templates, like webapi and mvc, allow you to target an alternate version when you create the project:

dotnet new webapi -f netcoreapp2.2 -o MyWebService

Framework Level

You can also control the default framework used by the CLI. We’ll continue using our example described above, where .NET Core 3.1 was installed first, then .NET Core 2.2 was installed later.

Since 2.2 was installed last, that makes it the default SDK:

dotnet --version

Result:

2.2.108

To change the default SDK version, first list your installed versions, and note the version you want to use as the default:

dotnet --list-sdks

Result:

2.2.108

3.1.301

(We’ll change our default to 3.1.301)

Create a global.json file. The setting in the global.json file will affect the dotnet cli when it’s invoked in any subdirectory under the location of the global.json file, so you’ll probably want to create it in a location like $HOME (for Linux), or C:\ (for Windows):

dotnet new globaljson

Then, edit the new global.json file, and update the “version” value to reflect the version you wish to be the default:

{

"sdk": {

"version": "3.1.301"

}

}

Then, the version reported by the CLI will match:

dotnet --version

Result:

3.1.301

More Information

How to install multiple versions of .NET Core side by side on ubuntu - Stack Overflow

Switching between .NET Core SDK Versions

Single-File / Multi-OS / Multi-Architecture Publishing in .NET

Walkthrough

Assumes .NET 5 or 6. Earlier versions of .NET Core support single file publishing as well, but it’s markedly improved in v5 and v6.

Single file publishing is controlled by three arguments to the dotnet publish command:

| Argument | Description |

|---|---|

| -r | Runtime Identifier. Specifies which operating system, operating system version, and architecture to target. |

| –self-contained true | Include all dependencies. This removes the need to have the associated .NET runtime installed on the target machine. |

| /p:PublishSingleFile=true | Bundle the output into a single file. |

For example, the command for publishing to a single file, targeting Windows 10 (64-bit), looks like this:

dotnet publish -c Release -r win10-x64 --self-contained true -p:PublishSingleFile=true

Targeting other OS/architecture combinations is as simple as replacing the RID value in the command with the RID you want to target.

I’ve tested the following RIDs:

| RID | Operating System | Architecture |

|---|---|---|

| linux-x64 | Linux | 64-bit AMD |

| linux-arm | Linux | 32-bit ARM (This can be used to target Raspberry Pi) |

| osx.10.14-x64 | macOS 10.14 Mojave | 64-bit ARM |

| win10-x64 | Windows 10 | 64-bit AMD |

You can find more information about RIDs in the .NET Core RID Catalog article.

Results for .NET 5

Technically speaking, the publish command does not produce a “single file” in all cases. Starting with a simple console project named “SingleFilePublish”, the following outputs are produced:

Linux

One executable:

SingleFilePublish

Windows

5 files, including the executable:

clrcompression.dll

clrjit.dll

coreclr.dll

mscordaccore.dll

SingleFilePublish.exe

macOS

8 files, including the executable:

libclrjit.dylib

libcoreclr.dylib

libSystem.IO.Compression.Native.dylib

libSystem.Native.dylib

libSystem.Net.Security.Native.dylib

libSystem.Security.Cryptography.Native.Apple.dylib

libSystem.Security.Cryptography.Native.OpenSsl.dylib

SingleFilePublish

Raspberry Pi (32-bit ARM)

One executable:

SingleFilePublish

Results for .NET 6

With .NET 6, it looks like true single-file publishing has been achieved.

Linux

One executable:

SingleFilePublish

Windows

One executable:

SingleFilePublish.exe

macOS

One executable:

SingleFilePublish

Raspberry Pi (32-bit ARM)

One executable:

SingleFilePublish

Deployment

When you run the dotnet publish command, outputs are written to the bin/Release/net5.0/publish directory. To deploy, simply copy the contents of this directory to your target machine.

The publish directory includes a .pdb file, but it’s not required. It just contains debugging information.

Sample Makefile

Simplifies the steps described above.

LINUX_RID = linux-x64

LINUX_RID_ARM = linux-arm

MAC_RID = osx.10.14-x64

WINDOWS_RID = win10-x64

CONFIGURATION_ARGS = Release

PUBLISH_ARGS = --self-contained true /p:PublishSingleFile=true

default:

@echo 'Targets:'

@echo ' run'

@echo ' run-win'

@echo ' copy-pi'

@echo ' publish'

@echo ' publish-win'

@echo ' publish-mac'

@echo ' publish-arm'

@echo ' clean'

run: publish

./bin/Release/net5.0/linux-x64/publish/SingleFilePublish

run-win: publish-win

wine ./bin/Release/net5.0/win10-x64/publish/SingleFilePublish

copy-pi: publish-arm

scp ./bin/Release/net5.0/linux-arm/publish/SingleFilePublish pi@raspi4-main:/home/pi

publish:

dotnet publish -c $(CONFIGURATION_ARGS) -r $(LINUX_RID) $(PUBLISH_ARGS)

publish-win:

dotnet publish -c $(CONFIGURATION_ARGS) -r $(WINDOWS_RID) $(PUBLISH_ARGS)

publish-arm:

dotnet publish -c $(CONFIGURATION_ARGS) -r $(LINUX_RID_ARM) $(PUBLISH_ARGS)

publish-mac:

dotnet publish -c $(CONFIGURATION_ARGS) -r $(MAC_RID) $(PUBLISH_ARGS)

clean:

-rm -rf bin/

-rm -rf obj/

Supporting Material for ‘.NET and Linux’ tech talk

Code Snippets

Unguarded Code

var registryValue =

Registry.GetValue("HKEY_CURRENT_USER", "value", "blarg");

Console.WriteLine(registryValue);

This code will raise a type initializer exception if run on a non-Windows system.

It will also generate a compile-time warning: “warning CA1416: This call site is reachable on all platforms. ‘Registry.GetValue(string, string?, object?)’ is only supported on: ‘windows’”

Guarded Code

var registryValue = (OperatingSystem.IsWindows())

? Registry.GetValue("HKEY_CURRENT_USER", "value", "blarg")

: $"Registry does not exist in {Environment.OSVersion}";

Console.WriteLine(registryValue);

This code will run successfully on all platforms. It will not generate a compile-time warning, as the compiler will see that the code is guarded.

Simple IoT Example

This is a simple code example for blinking an LED on a breakout board attached to a Raspberry Pi.

using System;

using System.Device.Gpio;

using System.Threading;

Console.WriteLine("Blinking LED. Press Ctrl+C to end.");

int pin = 18;

using var controller = new GpioController();

controller.OpenPin(pin, PinMode.Output);

bool ledOn = true;

while (true)

{

controller.Write(pin, ((ledOn) ? PinValue.High : PinValue.Low));

Thread.Sleep(1000);

ledOn = !ledOn;

}

Full example is here.

Links

Download .NET - Downloads for .NET, including ASP.NET Core.

Install .NET on Linux Distributions

.NET Runtime Identifier (RID) catalog

.NET IoT - Develop apps for IoT devices with the .NET IoT Libraries.

Writing cross platform P/Invoke code

Language Comparison - Go

go.dev - Go home page

Go by Example - Annotated example programs.

Go GOOS and GOARCH - Platform targeting values.

Language Comparison - Rust

Rust Programming Language - Rust home page

Rust by Example - Collection of runnable example programs illustrating various Rust concepts.

Rust Cookbook - Collection of simple examples that demonstrate good practices to accomplish common programming tasks.

TUI Frameworks for .NET

Terminal.Gui

Terminal.Gui - Cross Platform Terminal UI toolkit for .NET

Spectre.Console

WCF Export

Given the URL of a WCF service (along with a output prefix and target namespace), this Python script generates C# proxy source and config files. These files can then be imported/copied to a .NET project and used directly to consume the web service.

import os

import string

import subprocess

import sys

class WCFExporter:

def __init__(self, serviceURL, outputPrefix, targetNamespace):

self.serviceURL = serviceURL

self.outputPrefix = outputPrefix

self.targetNamespace = targetNamespace

self.svcUtilCmd = 'C:\\PROGRA~1\\MI2578~1\\Windows\\v6.0A\\Bin\\SvcUtil.exe'

def DropGeneratedCodeAttribute(self):

try:

mySourceFile = self.outputPrefix + '.temp.cs'

myTargetFile = self.outputPrefix + '.cs'

mySourceHandle = open(mySourceFile, 'r')

myTargetHandle = open(myTargetFile, 'w')

for inputLine in mySourceHandle:

if 'GeneratedCodeAttribute' not in inputLine:

myTargetHandle.write(inputLine)

myTargetHandle.close()

mySourceHandle.close()

os.remove(mySourceFile)

except Exception as ex:

print '[ERROR] ' + str(ex)

def GenerateFiles(self):

try:

retcode = -1

myCommandString = self.svcUtilCmd + ' /t:code ' + self.serviceURL + ' /out:' + self.outputPrefix + '.temp.cs /config:' + self.outputPrefix + '.config /namespace:*,' + self.targetNamespace

retcode = subprocess.call(myCommandString)

except Exception as ex:

print '[ERROR] ' + str(ex)

return retcode

def UsageMessage(self):

print '\nUSAGE: wcfexport.py <wcf service url> <output prefix> <target namespace>'

print '\n\n\tEXAMPLE: wcfexport.py http://CoolService:9030 MyNewProxy MyCompany.MyPkg'

print '\n\n\tOUTPUT: MyNewProxy.cs and MyNewProxy.config'

### MAIN starts here ###

myWCFExporter = WCFExporter('','','')

if len(sys.argv) != 4:

myWCFExporter.UsageMessage()

sys.exit(1)

myWCFExporter.serviceURL = sys.argv[1]

myWCFExporter.outputPrefix = sys.argv[2]

myWCFExporter.targetNamespace = sys.argv[3]

print '\n'

retcode = myWCFExporter.GenerateFiles()

if retcode == 0:

myWCFExporter.DropGeneratedCodeAttribute()

Web API with ASP.NET

Tutorial: Create a web API with ASP.NET Core – Microsoft Learn

Call a Web API From a .NET Client (C#) - ASP.NET 4.x – Microsoft Learn

Create a web API with ASP.NET Core controllers - Training – Microsoft Learn

json - HttpClient not supporting PostAsJsonAsync method C# - Stack Overflow

C / C++

Complex Object Collections in C++

C++ Example

In C++, vectors act as dynamic arrays, with the ability to resize themselves as elements are inserted or deleted.

To make them available:

#include <vector>

Most vector examples show simple data elements, e.g., to create a vector of int values:

// Initialize

vector<int> numberList;

// Add new elements to the vector:

vector.push_back(1);

vector.push_back(2);

vector.push_back(3);

But, how to add complex objects, when you need something like a dataset? It’s actually pretty straightforward. This example will use planetary data.

First, create a class to hold an individual data element:

class PlanetData {

public:

string planetName;

double tp_PeriodOrbit;

double long_LongitudeEpoch;

double peri_LongitudePerihelion;

double ecc_EccentricityOrbit;

double axis_AxisOrbit;

double incl_OrbitalInclination;

double node_LongitudeAscendingNode;

double theta0_AngularDiameter;

double v0_VisualMagnitude;

PlanetData() {}

PlanetData(string planetName, double tp_PeriodOrbit,

double long_LongitudeEpoch, double peri_LongitudePerihelion,

double ecc_EccentricityOrbit, double axis_AxisOrbit,

double incl_OrbitalInclination, double node_LongitudeAscendingNode,

double theta0_AngularDiameter, double v0_VisualMagnitude) {

this->planetName = planetName;

this->tp_PeriodOrbit = tp_PeriodOrbit;

this->long_LongitudeEpoch = long_LongitudeEpoch;

this->peri_LongitudePerihelion = peri_LongitudePerihelion;

this->ecc_EccentricityOrbit = ecc_EccentricityOrbit;

this->axis_AxisOrbit = axis_AxisOrbit;

this->incl_OrbitalInclination = incl_OrbitalInclination;

this->node_LongitudeAscendingNode = node_LongitudeAscendingNode;

this->theta0_AngularDiameter = theta0_AngularDiameter;

this->v0_VisualMagnitude = v0_VisualMagnitude;

}

};

Create a vector object, using the class as the vector type:

vector<PlanetData> planetData;

Add elements to the vector using instances of the class:

planetData.push_back(PlanetData("Mercury", 0.24085, 75.5671, 77.612, 0.205627, 0.387098, 7.0051, 48.449, 6.74, -0.42));

Then, you can loop through the vector, looking for an individual element:

// This example assumes it's being called inside a

// function, and returning an instance of the

// found element.

for (int i = 0; i < planetData.size(); i++)

if (planetData[i].planetName == planetName)

return planetData[i];

Complete Example

example.cpp

#include <iostream>

#include <vector>

using namespace std;

class PlanetData {

public:

string planetName;

double tp_PeriodOrbit;

double long_LongitudeEpoch;

double peri_LongitudePerihelion;

double ecc_EccentricityOrbit;

double axis_AxisOrbit;

double incl_OrbitalInclination;

double node_LongitudeAscendingNode;

double theta0_AngularDiameter;

double v0_VisualMagnitude;

PlanetData() {}

PlanetData(string planetName, double tp_PeriodOrbit,

double long_LongitudeEpoch, double peri_LongitudePerihelion,

double ecc_EccentricityOrbit, double axis_AxisOrbit,

double incl_OrbitalInclination, double node_LongitudeAscendingNode,

double theta0_AngularDiameter, double v0_VisualMagnitude) {

this->planetName = planetName;

this->tp_PeriodOrbit = tp_PeriodOrbit;

this->long_LongitudeEpoch = long_LongitudeEpoch;

this->peri_LongitudePerihelion = peri_LongitudePerihelion;

this->ecc_EccentricityOrbit = ecc_EccentricityOrbit;

this->axis_AxisOrbit = axis_AxisOrbit;

this->incl_OrbitalInclination = incl_OrbitalInclination;

this->node_LongitudeAscendingNode = node_LongitudeAscendingNode;

this->theta0_AngularDiameter = theta0_AngularDiameter;

this->v0_VisualMagnitude = v0_VisualMagnitude;

}

};

PlanetData planetLookup(string planetName) {

vector<PlanetData> planetData;

planetData.push_back(PlanetData("Mercury", 0.24085, 75.5671, 77.612, 0.205627, 0.387098, 7.0051, 48.449, 6.74, -0.42));

planetData.push_back(PlanetData("Venus", 0.615207, 272.30044, 131.54, 0.006812, 0.723329, 3.3947, 76.769, 16.92, -4.4));

planetData.push_back(PlanetData("Earth", 0.999996, 99.556772, 103.2055, 0.016671, 0.999985, -99.0, -99.0, -99.0, -99.0));

planetData.push_back(PlanetData("Mars", 1.880765, 109.09646, 336.217, 0.093348, 1.523689, 1.8497, 49.632, 9.36, -1.52));

planetData.push_back(PlanetData("Jupiter", 11.857911, 337.917132, 14.6633, 0.048907, 5.20278, 1.3035, 100.595, 196.74, -9.4));

planetData.push_back(PlanetData("Saturn", 29.310579, 172.398316, 89.567, 0.053853, 9.51134, 2.4873, 113.752, 165.6, -8.88));

planetData.push_back(PlanetData("Uranus", 84.039492, 356.135400, 172.884833, 0.046321, 19.21814, 0.773059, 73.926961, 65.8, -7.19));

planetData.push_back(PlanetData("Neptune", 165.845392, 326.895127, 23.07, 0.010483, 30.1985, 1.7673, 131.879, 62.2, -6.87));

for (int i = 0; i < planetData.size(); i++)

if (planetData[i].planetName == planetName)

return planetData[i];

return PlanetData("NoMatch", 0, 0, 0, 0, 0, 0, 0, 0, 0);

}

void printDetails(PlanetData planetDetails) {

cout << planetDetails.planetName << " " << planetDetails.tp_PeriodOrbit << " "

<< planetDetails.long_LongitudeEpoch << " "

<< planetDetails.peri_LongitudePerihelion << " "

<< planetDetails.ecc_EccentricityOrbit << " "

<< planetDetails.axis_AxisOrbit << " "

<< planetDetails.incl_OrbitalInclination << " "

<< planetDetails.node_LongitudeAscendingNode << " "

<< planetDetails.theta0_AngularDiameter << " "

<< planetDetails.v0_VisualMagnitude << endl;

}

int main() {

printDetails(planetLookup("Mercury"));

printDetails(planetLookup("Venus"));

printDetails(planetLookup("Earth"));

printDetails(planetLookup("Mars"));

printDetails(planetLookup("Jupiter"));

printDetails(planetLookup("Saturn"));

printDetails(planetLookup("Uranus"));

printDetails(planetLookup("Neptune"));

printDetails(planetLookup("Pluto")); // won't be found -> not in the dataset

return (0);

}

Enumeration Scoping in C++

The Problem

I spent way too much time trying to figure out what was wrong with this code:

#include <iostream>

using namespace std;

enum enum_1 { ok, warning, error };

enum enum_2 { ok, warning, error };

int main() {

enum_1 my_value1 = enum_1::ok;

enum_2 my_value2 = enum_2::error;

if (my_value1 == enum_1::ok) {

cout << "my_value1 is OK!" << endl;

} else {

cout << "my_value1 is not OK!" << endl;

}

if (my_value2 == enum_2::ok) {

cout << "my_value2 is OK!" << endl;

} else {

cout << "my_value2 is not OK!" << endl;

}

return (0);

}

Trying to build this code produces the following errors:

main.cpp:9:15: error: redefinition of enumerator 'ok'

enum enum_2 { ok, warning, error };

^

main.cpp:6:15: note: previous definition is here

enum enum_1 { ok, warning, error };

^

main.cpp:9:19: error: redefinition of enumerator 'warning'

enum enum_2 { ok, warning, error };

^

main.cpp:6:19: note: previous definition is here

enum enum_1 { ok, warning, error };

^

main.cpp:9:28: error: redefinition of enumerator 'error'

enum enum_2 { ok, warning, error };

^

main.cpp:6:28: note: previous definition is here

enum enum_1 { ok, warning, error };

^

main.cpp:13:30: error: no member named 'error' in 'enum_2'

enum_2 my_value2 = enum_2::error;

~~~~~~~~^

main.cpp:21:20: error: no member named 'ok' in 'enum_2'; did you mean simply 'ok'?

if (my_value2 == enum_2::ok) {

^~~~~~~~~~

ok

main.cpp:6:15: note: 'ok' declared here

enum enum_1 { ok, warning, error };

^

A quick search of StackOverflow, and I learned that “old style” enumerations in C++ are unscoped. Since the individual members in enums are global, the member names have to be unique.

Solution 1 (C++11)

If your compiler supports the C++11 standard, the fix is easy. Just add “class” to your enum declarations:

#include <iostream>

using namespace std;

enum class enum_1 { ok, warning, error };

enum class enum_2 { ok, warning, error };

int main() {

enum_1 my_value1 = enum_1::ok;

enum_2 my_value2 = enum_2::error;

if (my_value1 == enum_1::ok) {

cout << "my_value1 is OK!" << endl;

} else {

cout << "my_value1 is not OK!" << endl;

}

if (my_value2 == enum_2::ok) {

cout << "my_value2 is OK!" << endl;

} else {

cout << "my_value2 is not OK!" << endl;

}

return (0);

}

Solution 2

Alternatively, you can wrap your enums in namespaces:

namespace scope1 {

enum enum_1 { ok, warning, error };

}

namespace scope2 {

enum enum_2 { ok, warning, error };

}

Then, you can access the members as scope1::enum_1::ok, scope2::enum_2::warning, etc.

GDB and GPROF

GPROF Tutorial – How to use Linux GNU GCC Profiling Tool

How to Debug C Program using gdb in 6 Simple Steps

Learning C with gdb - Blog - Recurse Center

stdio.h: No such file or directory

As a part of testing out Linux Mint XFCE 16, I created a simple “hello world” in C:

#include <stdio.h>

main()

{

printf ("Hello, world!\n");

}

But, when I tried to compile it:

gcc hello.c

I got this:

stdio.h: No such file or directory

It seems that there are a few libraries that are not installed by default. It’s easy to add them, though:

sudo apt-get install build-essential

After doing this, the code compiles.

Database / SQL

Boilerplate Date Definitions for SQL

DECLARE @today DATETIME = CAST('7/21/2014' AS DATETIME)

DECLARE @firstDayCurrMnth DATETIME =

Dateadd(dd,CASE

WHEN DAY(@today) > 1 THEN ( ( DAY(@today) - 1 ) * -1 )

ELSE 0

END, @today)

DECLARE @firstDayPrevMnth DATETIME = Dateadd(mm, -1, @firstDayCurrMnth)

DECLARE @firstDayNextMnth DATETIME = Dateadd(mm, 1, @firstDayCurrMnth)

DECLARE @lastDayCurrMnth DATETIME = Dateadd(dd, -1, @firstDayNextMnth)

DECLARE @lastDayNextMnth DATETIME = Dateadd(dd, -1, @firstDayNextMnth)

DECLARE @lastDayPrevMnth DATETIME = Dateadd(dd, -1, @firstDayCurrMnth)

Conditions on aggregates in SQL

You cannot apply a where condition to an aggregate in SQL. For example, this does not work:

SELECT CustID, SUM(OrderAmt)

FROM Orders

GROUP BY CustID

WHERE SUM(OrderAmt) > 100 -- Doesn't work

There are a couple of ways to deal with this.

First, there’s the having clause, which specifically handles this requirement:

SELECT CustID, SUM(OrderAmt)

FROM Orders

GROUP BY CustID

HAVING SUM(OrderAmt) > 100

If the having clause doesn’t work for you, or you’d just prefer not to use it, you can also do some nested selecting and accomplish the same thing:

SELECT * FROM (

SELECT CustID, SUM(OrderAmt) AS OrderSum

FROM Orders

GROUP BY CustID

) AS OrderGrp

WHERE OrderGrp.OrderSum > 100

Find Duplicate Rows By Specific Columns

Consider a table, MyTable, with four columns: KeyCol, NameCol, IsActive, RowVersion. If you wanted to find all rows where NameCol and IsActive have the same values, you could do it as follows:

SELECT * FROM

(SELECT NameCol, IsActive, COUNT(*) AS DupCount

FROM MyTable

GROUP BY NameCol,IsActive) AS ResultSet

WHERE ResultSet.DupCount > 1

MySQL command line – quick tips

From Bash

Login (localhost access)

mysql -u <userid> -p

From the MySQL command prompt

List all databases on the current server

mysql> show databases;

Switch to a database

mysql> use <db name>;

Show all tables in the currently selected database

mysql> show tables;

View a table’s schema

mysql> describe <table name>;

Issue a select statement (example)

mysql> select * from <table name>;

Limit number of rows returned in a select

Note

TOP doesn’t work in MySQL…

mysql> select * from <table name> limit 0,10;

SQL Server in Linux

Installation

Server Control

Check status of SQL Server Service:

systemctl status mssql-server

Stop the SQL Server Service:

sudo systemctl stop mssql-server

Start the SQL Server Service:

sudo systemctl start mssql-server

Restart the SQL Server Service:

sudo systemctl restart mssql-server

Disable the SQL Server Service:

sudo systemctl stop mssql-server

sudo systemctl disable mssql-server

Enable the SQL Server Service:

sudo systemctl enable mssql-server

sudo systemctl start mssql-server

(You can get a script to simplify these commands here.)

Log Files

| Type | Location |

|---|---|

| SQL Server engine | /var/opt/mssql/log/errorlog |

| Installer | /var/opt/mssql/setup-< time stamp representing time of install> |

Log files are UTF-16 encoded. If needed (e.g., for ‘more’ or ‘less’ commands), you can encode in UTF-8 as follows:

sudo iconv –f UTF-16LE –t UTF-8 <errorlog> -o <output errorlog file>

SQL Transaction Template

BEGIN TRY

BEGIN TRANSACTION

-- Do stuff

COMMIT

END TRY

BEGIN CATCH

PRINT '[ERROR] ' + ERROR_MESSAGE();

PRINT '[SEVERITY] ' + CAST(ERROR_SEVERITY() AS VARCHAR);

PRINT '[STATE] ' + CAST(ERROR_STATE() AS VARCHAR);

ROLLBACK

END CATCH

Safe Way to Test

BEGIN TRY

BEGIN TRANSACTION

-- 1. SELECT statement to check the "pre" state.

-- 2. UPDATE to make the change.

-- 3. SELECT statement to check the "post" state.

ROLLBACK -- Change back to a COMMIT when you're ready for production.

END TRY

BEGIN CATCH

PRINT '[ERROR] ' + ERROR_MESSAGE();

PRINT '[SEVERITY] ' + CAST(ERROR_SEVERITY() AS VARCHAR);

PRINT '[STATE] ' + CAST(ERROR_STATE() AS VARCHAR);

ROLLBACK

END CATCH

Docker

Docker In Linux

Tested in Ubuntu.

Installation

This is a summarization of the full article found here.

I usually add a repo to the package manager for stuff like this, but I decided to use a standalone .deb file this time.

Since Linux Mint 19 is built on Ubuntu 18.04 (Bionic Beaver), I downloaded the latest .deb file from here.

After installation, the Docker daemon starts automatically.

Tip

If you don’t want to require ‘sudo’ with every docker command, add your userid to the ‘docker’ group.

Verify the Docker installation with the following command:

sudo docker run hello-world

If the Docker installation is valid, the hello-world image will be downloaded from Docker Hub, and will run. It will print out an informational message similar to this:

Hello from Docker!

This message shows that your installation appears to be working correctly.

To generate this message, Docker took the following steps:

1. The Docker client contacted the Docker daemon.

2. The Docker daemon pulled the "hello-world" image from the Docker Hub.

(amd64)

3. The Docker daemon created a new container from that image which runs the

executable that produces the output you are currently reading.

4. The Docker daemon streamed that output to the Docker client, which sent it

to your terminal.

To try something more ambitious, you can run an Ubuntu container with:

$ docker run -it ubuntu bash

Share images, automate workflows, and more with a free Docker ID:

https://hub.docker.com/

For more examples and ideas, visit:

https://docs.docker.com/engine/userguide/

…and then exit.

Manage Containers

You can display a list of all your installed images and their associated containers with this command:

sudo docker ps -a

The results list will look something like this:

| CONTAINER ID | IMAGE | COMMAND | CREATED | STATUS | PORTS | NAMES |

|---|---|---|---|---|---|---|

| 3467bc449fe9 | hello-world | “/hello” | 24 hours ago | Exited (0) 24 hours ago | friendly_haibt | |

| 890e2533976a | hello-world | “/hello” | 25 hours ago | Exited (0) 25 hours ago | angry_agnesi |

If you’d like to remove a container, use the docker rm command. For example, if I wanted to remove the friendly_haibt container for the hello-world image, I’d do this:

sudo docker rm friendly_haibt

Then, when I run the docker ps command again, I’ll see this:

| CONTAINER ID | IMAGE | COMMAND | CREATED | STATUS | PORTS | NAMES |

|---|---|---|---|---|---|---|

| 890e2533976a | hello-world | “/hello” | 25 hours ago | Exited (0) 25 hours ago | angry_agnesi |

Important

After you remove a persistent container, all data associated with the container is also removed, and is not recoverable. Be careful!

Dump log file for a container:

sudo docker log <container-name>

Stop a running container:

sudo docker stop <container-name>

Restart a stopped container:

sudo docker restart <container-name>

Example: BusyBox container

A very simple example using BusyBox UNIX tools:

sudo docker run -it --rm busybox

This command drops you into a sh shell in a BusyBox environment, and the container is automatically removed when you exit.

Helper Script For Container Examples

#!/usr/bin/python3

import os

import sys

class CDockerMgr:

dockerCmd = 'sudo docker'

def __init__(self):

pass

def ExecuteBaseCommand(self, currentCommand):

if currentCommand == 'status':

self.ExecDocker("ps -a")

else:

self.ShowHelp()

def ExecuteContainerCommand(self, currentContainer, currentCommand):

if currentContainer == 'sql1':

if currentCommand == 'start':

self.ExecDocker("restart {0}".format(currentContainer))

elif currentCommand == 'stop':

self.ExecDocker("stop {0}".format(currentContainer))

elif currentCommand == 'bash':

self.ExecDocker("exec -it {0} 'bash'".format(currentContainer))

else:

self.ShowHelp()

elif currentContainer == 'busybox':

if currentCommand == 'run':

self.ExecDocker("run -it --rm {0}".format(currentContainer))

else:

self.ShowHelp()

else:

self.ShowHelp()

def ExecDocker(self, args):

fullCmd = "{0} {1}".format(self.dockerCmd, args)

os.system(fullCmd)

exit(0)

def ShowHelp(self):

print("USAGE:")

print("\tdockit <container> <command>")

print("")

print("Valid containers and commands:")

print("\tstatus")

print("\tsql1")

print("\t\tstart")

print("\t\tstop")

print("\t\tbash")

print("\tbusybox")

print("\t\trun")

exit(0)

myDockerMgr = CDockerMgr()

if len(sys.argv) == 2:

myDockerMgr.ExecuteBaseCommand(sys.argv[1])

elif len(sys.argv) == 3:

myDockerMgr.ExecuteContainerCommand(sys.argv[1], sys.argv[2])

else:

myDockerMgr.ShowHelp()

MongoDB Quick Start in Docker

These instructions configure a server instance named mongo-test, running MongoDB version 4.4.0 in a basic Ubuntu Bionic image. My host machine is running Ubuntu. I’m assuming you’ve already installed Docker. If not, you might want to check out this article.

Tip

You’ll probably need to sudo your docker commands.

Basics

Start a server instance:

docker run -p 27017:27017 --name mongo-test -d mongo:4.4.0-bionic

Important

The port mapping (-p 27017:27017) is important. It allows you to connect to the running instance from your host machine.

A running instance can stopped with this:

docker stop mongo-test

And then started (or restarted) with this:

docker restart mongo-test

Open a bash shell in the running instance:

docker exec -it mongo-test bash

View MongoDB log files for the running instance:

docker logs mongo-test

Running Mongo Shell

You can run an interactive Mongo Shell a couple of ways.

Inside the running instance

First, open a bash shell inside the instance:

docker exec -it mongo-test bash

Then, run Mongo Shell:

mongo

From the host machine

First, install the MongoDB client tools:

sudo apt install mongodb-clients

Then, you can do this:

mongo --host localhost

Using Mongo Shell

List Databases

> show dbs

admin 0.000GB

config 0.000GB

local 0.000GB

Use Database

MongoDB doesn’t provide an explicit command for creating databases. A database will automatically be created the first time you try to use it (and add data).

Use a database called ‘testdb’:

> use testdb

switched to db testdb

In its simplest form, a database in MongoDB consists of two items:

- A document, which contains data, and,

- A collection, which is a container of documents.

A document is a data structure composed of field and value pairs. It’s a JSON object that MongoDB stores on disk in binary (BSON) format.

Drop Database

If you need to drop a database that’s already been created, you switch to it (‘use’), then issue a dropDatabase command:

> show dbs

admin 0.000GB

config 0.000GB

local 0.000GB

testdb 0.000GB

> use testdb

switched to db testdb

> db.dropDatabase()

{ "dropped" : "testdb", "ok" : 1 }

> show dbs

admin 0.000GB

config 0.000GB

local 0.000GB

Add Data

Let’s add some data documents to testdb, in a collection called ‘people’:

> use testdb

switched to db testdb

> db.people.insert( {firstName: 'John', lastName: 'Smith'} )

WriteResult({ "nInserted" : 1 })

> db.people.insert( {firstName: 'Bob', lastName: 'Jones'} )

WriteResult({ "nInserted" : 1 })

> db.people.find()

{ "_id" : ObjectId("5f4bc4dd54e2c67896143097"), "firstName" : "John", "lastName" : "Smith" }

{ "_id" : ObjectId("5f4bc4e354e2c67896143098"), "firstName" : "Bob", "lastName" : "Jones" }

The data in the insert commands is formatted as JSON, but quotes around key names are not required, and data can be single-quoted:

{

firstName: 'John',

lastName: 'Smith'

}

Update Data

To modify existing data, you pass two sets of data to update(): a filter, and an update action. The filter locates the document, and the update action specifies the data to modify.

In this example, we’ll change the “Bob Jones” record to “Robert Jones”:

> db.people.find()

{ "_id" : ObjectId("5f4bc4dd54e2c67896143097"), "firstName" : "John", "lastName" : "Smith" }

{ "_id" : ObjectId("5f4bc4e354e2c67896143098"), "firstName" : "Bob", "lastName" : "Jones" }

> db.people.update({ firstName: "Bob", lastName: "Jones" }, { $set: {firstName: "Robert" } } )

WriteResult({ "nMatched" : 1, "nUpserted" : 0, "nModified" : 1 })

> db.people.find()

{ "_id" : ObjectId("5f4bc4dd54e2c67896143097"), "firstName" : "John", "lastName" : "Smith" }

{ "_id" : ObjectId("5f4bc4e354e2c67896143098"), "firstName" : "Robert", "lastName" : "Jones" }

Remove Data

To remove data, you pass a filter to remove(), specifying the document (or documents) you want to remove.

In this example, we’ll add a new document to the people collection, and then remove it.

> db.people.insert( { firstName: "To", lastName: "Remove" } )

WriteResult({ "nInserted" : 1 })

> db.people.find()

{ "_id" : ObjectId("5f4bc4dd54e2c67896143097"), "firstName" : "John", "lastName" : "Smith" }

{ "_id" : ObjectId("5f4bc4e354e2c67896143098"), "firstName" : "Robert", "lastName" : "Jones" }

{ "_id" : ObjectId("5f4bf7595402b299ee512fd8"), "firstName" : "To", "lastName" : "Remove" }

> db.people.remove( { firstName: "To", lastName: "Remove"} )

WriteResult({ "nRemoved" : 1 })

> db.people.find()

{ "_id" : ObjectId("5f4bc4dd54e2c67896143097"), "firstName" : "John", "lastName" : "Smith" }

{ "_id" : ObjectId("5f4bc4e354e2c67896143098"), "firstName" : "Robert", "lastName" : "Jones" }

Managing Collections

To see the collections in a database:

> use testdb

switched to db testdb

> show collections

people

You can also use getCollectionNames(), which returns results as BSON:

> db.getCollectionNames()

[ "people" ]

Add a collection explicitly with createCollection:

> show collections

people

> db.createCollection("things")

{ "ok" : 1 }

> show collections

people

things

Drop a collection:

> show collections

people

things

> db.things.drop()

true

> show collections

people

Count of documents in a collection:

> db.people.count()

2

Retrieving Data

We’ve already employed a simple find in our add/update/delete examples: db.<collection_name>.find().

Find also accepts two optional parameters:

- Query filter: Describes how to filter the results, similar to a WHERE clause in SQL.

- Projection: Specifies which key/values from the document we want to see.

A find with no arguments retrieves up to the first 20 documents in a collection:

> db.people.find()

{ "_id" : ObjectId("5f4bc4dd54e2c67896143097"), "firstName" : "John", "lastName" : "Smith" }

{ "_id" : ObjectId("5f4bc4e354e2c67896143098"), "firstName" : "Robert", "lastName" : "Jones" }

A filter with an exact match on one key looks like this:

> db.people.find( {firstName: "John"} )

{ "_id" : ObjectId("5f4bc4dd54e2c67896143097"), "firstName" : "John", "lastName" : "Smith" }

Matching on multiple keys, similar to an AND in a SQL WHERE clause, looks like this:

> db.people.find(

... {

... $and: [

... { firstName: "John" },

... { lastName: "Smith" }

... ]

... });

{ "_id" : ObjectId("5f4bc4dd54e2c67896143097"), "firstName" : "John", "lastName" : "Smith" }

MongoDB supports the following query comparison operators: $eq, $gt, $gte, $lt, $lte, $ne, $in, and $nin, along with the following logical operators: $or, $and, $not, and $nor. Regex is also supported.

Projections can be used to limit the keys returned. For example, here’s how to return just the last names:

> db.people.find( { }, { _id: 0, lastName: 1 } );

{ "lastName" : "Smith" }

{ "lastName" : "Jones" }

The numeric values indicate whether to include (1) or exclude (0) a given field. The _id field is always returned, unless specifically excluded.

Results can also be sorted:

> db.people.find( { }, { } ).sort( { lastName: 1 });

{ "_id" : ObjectId("5f4bc4e354e2c67896143098"), "firstName" : "Robert", "lastName" : "Jones" }

{ "_id" : ObjectId("5f4bc4dd54e2c67896143097"), "firstName" : "John", "lastName" : "Smith" }

The numeric value controls whether to sort ascending (1) or descending (-1).

For large result sets, the number of results to return can be specified:

> db.people.find( { }, { } ).limit( 1 );

{ "_id" : ObjectId("5f4bc4dd54e2c67896143097"), "firstName" : "John", "lastName" : "Smith" }

MongoDB in .NET (C#)

Data in MongoDB can be accessed and manipulated in .NET (Standard and Core) applications using MongoDB.Driver. This is a simple introduction to connecting to MongoDB, retrieving data, and displaying it.

Create a .NET Core console application:

dotnet new console -o DotMongo

Add a reference to MongoDB.Driver:

cd DotMongo

dotnet add package MongoDB.Driver --version 2.11.1

Open Program.cs in your editor of choice, and replace the contents with this:

using System;

using MongoDB.Driver;

using MongoDB.Bson;

namespace DotMongo

{

class Program

{

static void Main(string[] args)

{

try

{

var databaseName = "testdb";

// Get database reference.

var mongoDatabase = GetDatabaseReference("localhost", 27017, databaseName);

Console.WriteLine($"Connected to database {databaseName}");

// Get a reference to the "people" collection inside testdb.

var collection = mongoDatabase.GetCollection<BsonDocument>("people");

// We're retrieving all documents in the collection,

// but we still need an empty filter.

var filter = new BsonDocument();

var count = 0;

// Open a cursor with all the matching documents.

using (var cursor = collection.FindSync<BsonDocument>(filter))

{

// Iterate through the cursor

while (cursor.MoveNext())

{

// Get documents at the current cursor location.

var batch = cursor.Current;

foreach (var document in batch)

{

// Get values from the current document, then display them.

var firstName = document.GetElement("firstName").Value.ToString();

var lastName = document.GetElement("lastName").Value.ToString();

Console.WriteLine($"Full name: {firstName} {lastName}");

count++;

}

}

}

Console.WriteLine($"Total records: {count}");

}

catch (Exception ex)

{

Console.WriteLine(ex.Message);

}

}

public static IMongoDatabase GetDatabaseReference(string hostName, int portNumber, string databaseName)

{

string connectionString = $"mongodb://{hostName}:{portNumber}";

// Connect to MongoDB

var mongoClient = new MongoClient(connectionString);

// Get a reference to the specified database

var mongoDatabase = mongoClient.GetDatabase(databaseName);

return mongoDatabase;

}

}

}

Run the application:

dotnet run

You should see output that looks like this:

Connected to database testdb

Full name: John Smith

Full name: Robert Jones

Total records: 2

You can find the full project here.

Learn More

MVS Turnkey in Docker

Assumes that you already have Docker installed and running. If you don’t, you can find instructions here.

Depending on your configuration, you may have to sudo your docker commands.

Pull Ubuntu Image

Pull the latest Ubuntu image:

docker pull ubuntu

Verify image:

docker image list

Results should look something like this:

REPOSITORY TAG IMAGE ID CREATED SIZE

ubuntu latest 597ce1600cf4 6 days ago 72.8MB

Create Container / Start a Shell

Create a container in the ubuntu image, and start a shell:

docker run --interactive --tty --name mvs_container ubuntu /bin/bash

(You can use any name you like. mvs_container is just an example.)

Get IP Address

Update your package list, install net-tools, run ifconfig, and note your IP address (you’ll need it later):

apt update

apt install net-tools

ifconfig

Retrieve the MVS Turnkey Archive

Change to /root, create a downloads directory, install wget, and retrieve the turnkey archive:

cd /root

mkdir downloads

cd downloads

apt install wget

wget https://wotho.ethz.ch/tk4-/tk4-_v1.00_current.zip

Install and Run MVS

Change to the /opt directory, create a mvs directory, install unzip, and then extract the turnkey archive into the mvs directory:

cd /opt

mkdir mvs

cd mvs

apt install unzip

unzip /root/downloads/tk4-_v1.00_current.zip

Turn on console mode:

cd unattended

./set_console_mode

cd ..

Start MVS:

./mvs

When the startup is complete:

You can open an instance of the x3270 terminal emulator, and connect to the running instance using the IP address you noted earlier. Detailed MVS operations instructions can be found here. (Just note that these instructions are for running on the Raspberry Pi, so adapt accordingly.)

After you complete your session and exit the container, you can return to it later:

docker start --interactive mvs_container

RabbitMQ in Docker

Supporting repo is here. (Includes a Celery example)

If you don’t already have Docker installed, you can find instructions here.

Setup and Run

Pull the RabbitMQ docker container:

docker pull rabbitmq

Startup for RabbitMQ docker container:

sudo docker run -d --hostname my-rabbit --name some-rabbit -p 5672:5672 rabbitmq:3

The port mapping (5672:5672) is not included in the instructions on Docker Hub, but it’s required for the Python send/receive scripts to work.

Simple Test in Python

You’ll need to install the Pika library before you run the send/receive scripts:

sudo pip3 install pika --upgrade

Python script to send a message:

send.py

#!/usr/bin/env python3

import pika

connection = pika.BlockingConnection(pika.ConnectionParameters('localhost'))

channel = connection.channel()

channel.queue_declare(queue='hello')

channel.basic_publish(exchange='', routing_key='hello', body='Hello World!')

print(" [x] Sent 'Hello World!'")

connection.close()

Python script to receive messages:

receive.py

#!/usr/bin/env python3

import pika

connection = pika.BlockingConnection(pika.ConnectionParameters('localhost'))

channel = connection.channel()

channel.queue_declare(queue='hello')

def callback(ch, method, properties, body):

print(" [x] Received %r" % body)

channel.basic_consume(queue='hello', on_message_callback=callback, auto_ack=True)

print(' [*] Waiting for messages. To exit press CTRL+C')

channel.start_consuming()

Simple Test in Go

First, install amqp using go get:

go get github.com/streadway/amqp

Then, use this to send a message:

send.go

package main

import (

"log"

"github.com/streadway/amqp"

)

func failOnError(err error, msg string) {

if err != nil {

log.Fatalf("%s: %s", msg, err)

}

}

func main() {

conn, err := amqp.Dial("amqp://guest:guest@localhost:5672/")

failOnError(err, "Failed to connect to RabbitMQ")

defer conn.Close()

ch, err := conn.Channel()

failOnError(err, "Failed to open a channel")

defer ch.Close()

q, err := ch.QueueDeclare(

"hello", // name

false, // durable

false, // delete when unused

false, // exclusive

false, // no-wait

nil, // arguments

)

failOnError(err, "Failed to declare a queue")

body := "Hello World!"

err = ch.Publish(

"", // exchange

q.Name, // routing key

false, // mandatory

false, // immediate

amqp.Publishing{

ContentType: "text/plain",

Body: []byte(body),

})

log.Printf(" [x] Sent %s", body)

failOnError(err, "Failed to publish a message")

}

And use this to receive messages:

receive.go

package main

import (

"log"

"github.com/streadway/amqp"

)

func failOnError(err error, msg string) {

if err != nil {

log.Fatalf("%s: %s", msg, err)

}

}

func main() {

conn, err := amqp.Dial("amqp://guest:guest@localhost:5672/")

failOnError(err, "Failed to connect to RabbitMQ")

defer conn.Close()

ch, err := conn.Channel()

failOnError(err, "Failed to open a channel")

defer ch.Close()

q, err := ch.QueueDeclare(

"hello", // name

false, // durable

false, // delete when unused

false, // exclusive

false, // no-wait

nil, // arguments

)

failOnError(err, "Failed to declare a queue")

msgs, err := ch.Consume(

q.Name, // queue

"", // consumer

true, // auto-ack

false, // exclusive

false, // no-local

false, // no-wait

nil, // args

)

failOnError(err, "Failed to register a consumer")

forever := make(chan bool)

go func() {

for d := range msgs {

log.Printf("Received a message: %s", d.Body)

}

}()

log.Printf(" [*] Waiting for messages. To exit press CTRL+C")

<-forever

}

SQL Server in Docker

This is a summarization of the full article found here.

Setup

Get the SQL Server 2017 image from Docker Hub:

sudo docker pull mcr.microsoft.com/mssql/server:2017-latest

Start up the container image, and init SQL Server setup:

sudo docker run -e "ACCEPT_EULA=Y" -e "MSSQL_SA_PASSWORD=<YourStrong@Passw0rd>" \

-p 1433:1433 --name sql1 --hostname sql1 \

-d \

mcr.microsoft.com/mssql/server:2017-latest

Important

The password must follow SQL Server default password policy, or the setup will fail: at least 8 characters long, containing characters from three of the following four sets: Uppercase letters, Lowercase letters, Base 10 digits, and Symbols.

Note

By default, this creates a container with the Developer edition of SQL Server 2017.

Detailed description of parameters from the previous example:

| Parameter | Description |

|---|---|

| -e ‘ACCEPT_EULA=Y’ | Set the ACCEPT_EULA variable to any value to confirm your acceptance of the End-User Licensing Agreement. Required setting for the SQL Server image. |

| -e ‘MSSQL_SA_PASSWORD=’ | Specify your own strong password that is at least 8 characters and meets the SQL Server password requirements. Required setting for the SQL Server image. |

| -p 1433:1433 | Map a TCP port on the host environment (first value) with a TCP port in the container (second value). In this example, SQL Server is listening on TCP 1433 in the container and this is exposed to the port, 1433, on the host. |

| –name sql1 | Specify a custom name for the container rather than a randomly generated one. If you run more than one container, you cannot reuse this same name. |

| microsoft/mssql-server-linux:2017-latest | The SQL Server 2017 Linux container image. |

Check the status of your Docker containers:

sudo docker ps -a

Change the SA password

After initial setup, the MSSQL_SA_PASSWORD environment variable you specified is discoverable by running echo $MSSQL_SA_PASSWORD in the container. For security purposes, change your SA password.

Use docker exec to run sqlcmd to change the password using Transact-SQL.

sudo docker exec -it sql1 /opt/mssql-tools/bin/sqlcmd \

-S localhost -U SA \

-P "$(read -sp "Enter current SA password: "; echo "${REPLY}")" \

-Q "ALTER LOGIN SA WITH PASSWORD=\"$(read -sp "Enter new SA password: "; echo "${REPLY}")\""

Connect to SQL Server

Start an interactive bash shell inside your running container. In the following example sql1 is name specified by the –name parameter when you created the container.

sudo docker exec -it sql1 "bash"

Once inside the container, connect locally with sqlcmd. Sqlcmd is not in the path by default, so you have to specify the full path.

/opt/mssql-tools/bin/sqlcmd -S localhost -U SA -P '<YourNewStrong!Passw0rd>'

After running SqlCmd, you can do normal database stuff: create databases, query data, etc.

Connect from outside the container

You can also connect to the SQL Server instance on your Docker machine from any external Linux, Windows, or macOS tool that supports SQL connections.

The following steps use sqlcmd outside of your container to connect to SQL Server running in the container. These steps assume that you already have the SQL Server command-line tools installed outside of your container. The same principals apply when using other tools, but the process of connecting is unique to each tool.

Find the IP address for the machine that hosts your container. On Linux, use ifconfig or ip addr. On Windows, use ipconfig.

Run sqlcmd specifying the IP address and the port mapped to port 1433 in your container. In this example, that is the same port, 1433, on the host machine. If you specified a different mapped port on the host machine, you would use it here.

sqlcmd -S <ip_address>,1433 -U SA -P '<YourNewStrong!Passw0rd>'

Embedded and IoT

.NET IoT

.NET Internet of Things (IoT) applications

Develop apps for IoT devices with the .NET IoT Libraries

dotnet/iot - This repo includes .NET Core implementations for various IoT boards, chips, displays and PCBs.

Process real-time IoT data streams with Azure Stream Analytics

Knowledge Base

Simple Raspberry Pi Control With .NET IoT and Python

Specific Devices

Quickstart - Use .NET to drive a Raspberry Pi Sense HAT

6502 Programming in C

What is the 6502?

From Wikipedia:

The MOS Technology 6502 (typically pronounced “sixty-five-oh-two”) is an 8-bit microprocessor that was designed by a small team led by Chuck Peddle for MOS Technology. The design team had formerly worked at Motorola on the Motorola 6800 project; the 6502 is essentially a simplified, less expensive and faster version of that design.

When it was introduced in 1975, the 6502 was the least expensive microprocessor on the market by a considerable margin. It initially sold for less than one-sixth the cost of competing designs from larger companies, such as the 6800 or Intel 8080. Its introduction caused rapid decreases in pricing across the entire processor market. Along with the Zilog Z80, it sparked a series of projects that resulted in the home computer revolution of the early 1980s.

Popular video game consoles and home computers of the 1980s and early 1990s, such as the Atari 2600, Atari 8-bit computers, Apple II, Nintendo Entertainment System, Commodore 64, Atari Lynx, BBC Micro and others, use the 6502 or variations of the basic design. Soon after the 6502’s introduction, MOS Technology was purchased outright by Commodore International, who continued to sell the microprocessor and licenses to other manufacturers. In the early days of the 6502, it was second-sourced by Rockwell and Synertek, and later licensed to other companies.

In 1981, the Western Design Center started development of a CMOS version, the 65C02. This continues to be widely used in embedded systems, with estimated production volumes in the hundreds of millions.

Installation

This assumes a Debian-based system.

sudo apt install cc65 cc65-doc

The following programs are installed (descriptions taken from https://cc65.github.io/doc/):

| Program | Description |

|---|---|

| ar65 | Archiver for object files generated by ca65. It allows to create archives, add or remove modules from archives, and to extract modules from existing archives. |

| ca65 | Macro assembler for the 6502, 65C02, and 65816 CPUs. It is used as a companion assembler for the cc65 crosscompiler, but it may also be used as a standalone product. |

| cc65 | C compiler for 6502 targets. It supports several 6502-based home computers such as the Commodore and Atari machines, but it easily is retargetable. |

| chrcvt65 | Vector font converter. It is able to convert a foreign font into the native format. |

| cl65 | Compile & link utility for cc65, the 6502 C compiler. It was designed as a smart frontend for the C compiler (cc65), the assembler (ca65), the object file converter (co65), and the linker (ld65). |

| co65 | Object file conversion utility. It converts o65 object files into the native object file format used by the cc65 tool chain. Since o65 is the file format used by cc65 for loadable drivers, the co65 utility allows (among other things) to link drivers statically to the generated executables instead of loading them from disk. |

| da65 | 6502/65C02 disassembler that is able to read user-supplied information about its input data, for better results. The output is ready for feeding into ca65, the macro assembler supplied with the cc65 C compiler. |

| grc65 | A compiler that can create GEOS headers and menus for cc65-compiled programs. |

| ld65 | The linker combines object files into an executable file. ld65 is highly configurable and uses configuration files for high flexibility. |

| od65 | Object file dump utility. It is able to output most parts of ca65-generated object files in readable form. |

| sim65 | Simulator for 6502 and 65C02 CPUs. It allows to test target independent code. |

| sp65 | Sprite and bitmap utility that is part of the cc65 development suite. It is used to convert graphics and bitmaps into the target formats of the supported machines. |